Structure and Interpretation of Computer Programs

The LFE Edition

Harold Abelson and Gerald Jay Sussman with Julie Sussman

foreword by Alan J. Perlis

LFE translation by Duncan McGreggor

First Edition

The first edition of this book was comprised of a series of texts written by faculty of the Electrical Engineering and Computer Science Department at the Massachusetts Institute of Technology. It was edited and produced by The MIT Press under a joint production-distribution arrangement with the McGraw-Hill Book Company.

Ordering Information

North America

Text orders should be addressed to the McGraw-Hill Book Company.

All other orders should be addressed to The MIT Press.

Outside North America

All orders should be addressed to The MIT Press or its local distributor.

© 1996 by The Massachusetts Institute of Technology

Second Edition

All rights reserved. No part of this book may be reproduced in any form or by any electronic or mechanical means (including photocopying, recording, or information storage and retrieval) without permission in writing from the publisher.

This work is licensed under a Creative Commons Attribution-Noncommercial 3.0 Unported License.

This book was set by the authors using the LaTeX typesetting system and was printed and bound in the United States of America.

Library of Congress Cataloging-in-Publication Data

Abelson, Harold

Structure and interpretation of computer programs /

Harold Abelson and Gerald Jay Sussman, with Julie Sussman. --

2nd ed.

p. cm. -- (Electrical engineering and computer science series)

Includes bibliographical references and index.

ISBN 0-262-01153-0 (MIT Press hardcover)

ISBN 0-262-51087-1 (MIT Press paperback)

ISBN 0-07-000484-6 (McGraw-Hill hardcover)

1. Electronic digital computers -- Programming.

2. LISP (Computer program language)

I. Sussman, Gerald Jay.

II. Sussman, Julie.

III. Title.

IV. Series: MIT electrical engineering and

computer science series.

QA76.6.A255 1996

005.13'3 -- dc20 96-17756

Fourth printing, 1999

LFE Edition

© 2015-2020 by Duncan McGreggor

This work is licensed under a Creative Commons Attribution-Noncommercial 3.0 Unported License.

About

This Gitbook (available here) is a work in progress, converting the MIT classic Structure and Interpretation of Computer Programs to Lisp Flavored Erlang. We are forever indebted to Harold Abelson, Gerald Jay Sussman, and Julie Sussman for their labor of love and intelligence. Needless to say, our gratitude also extends to The MIT Press for their generosity in licensing this work as Creative Commons.

Contributing

This is a huge project, and we can use your help! Got an idea? Found a bug? Let us know!

Building the Book

To build a local copy of the book, install the dependencies:

$ make deps

On Linux, you'll need to run that with sudo.

Then install the gitbook modules:

$ make setup

Finally, build the book:

$ make book

Dedication

This book is dedicated, in respect and admiration, to the spirit that lives in the computer.

I think that it's extraordinarily important that we in computer science keep fun in computing. When it started out, it was an awful lot of fun. Of course, the paying customers got shafted every now and then, and after a while we began to take their complaints seriously. We began to feel as if we really were responsible for the successful, error-free perfect use of these machines. I don't think we are. I think we're responsible for stretching them, setting them off in new directions, and keeping fun in the house. I hope the field of computer science never loses its sense of fun. Above all, I hope we don't become missionaries. Don't feel as if you're Bible salesmen. The world has too many of those already. What you know about computing other people will learn. Don't feel as if the key to successful computing is only in your hands. What's in your hands, I think and hope, is intelligence: the ability to see the machine as more than when you were first led up to it, that you can make it more.

-- Alan J. Perlis (April 1, 1922 - February 7, 1990)

Foreword

Educators, generals, dieticians, psychologists, and parents program. Armies, students, and some societies are programmed. An assault on large problems employs a succession of programs, most of which spring into existence en route. These programs are rife with issues that appear to be particular to the problem at hand. To appreciate programming as an intellectual activity in its own right you must turn to computer programming; you must read and write computer programs -- many of them. It doesn't matter much what the programs are about or what applications they serve. What does matter is how well they perform and how smoothly they fit with other programs in the creation of still greater programs. The programmer must seek both perfection of part and adequacy of collection. In this book the use of "program" is focused on the creation, execution, and study of programs written in a dialect of Lisp for execution on a digital computer. Using Lisp we restrict or limit not what we may program, but only the notation for our program descriptions.

Our traffic with the subject matter of this book involves us with three foci of phenomena: the human mind, collections of computer programs, and the computer. Every computer program is a model, hatched in the mind, of a real or mental process. These processes, arising from human experience and thought, are huge in number, intricate in detail, and at any time only partially understood. They are modeled to our permanent satisfaction rarely by our computer programs. Thus even though our programs are carefully handcrafted discrete collections of symbols, mosaics of interlocking functions, they continually evolve: we change them as our perception of the model deepens, enlarges, generalizes until the model ultimately attains a metastable place within still another model with which we struggle. The source of the exhilaration associated with computer programming is the continual unfolding within the mind and on the computer of mechanisms expressed as programs and the explosion of perception they generate. If art interprets our dreams, the computer executes them in the guise of programs!

For all its power, the computer is a harsh taskmaster. Its programs must be correct, and what we wish to say must be said accurately in every detail. As in every other symbolic activity, we become convinced of program truth through argument. Lisp itself can be assigned a semantics (another model, by the way), and if a program's function can be specified, say, in the predicate calculus, the proof methods of logic can be used to make an acceptable correctness argument. Unfortunately, as programs get large and complicated, as they almost always do, the adequacy, consistency, and correctness of the specifications themselves become open to doubt, so that complete formal arguments of correctness seldom accompany large programs. Since large programs grow from small ones, it is crucial that we develop an arsenal of standard program structures of whose correctness we have become sure -- we call them idioms -- and learn to combine them into larger structures using organizational techniques of proven value. These techniques are treated at length in this book, and understanding them is essential to participation in the Promethean enterprise called programming. More than anything else, the uncovering and mastery of powerful organizational techniques accelerates our ability to create large, significant programs. Conversely, since writing large programs is very taxing, we are stimulated to invent new methods of reducing the mass of function and detail to be fitted into large programs.

Unlike programs, computers must obey the laws of physics. If they wish to perform rapidly -- a few nanoseconds per state change -- they must transmit electrons only small distances (at most 1 1/2 feet). The heat generated by the huge number of devices so concentrated in space has to be removed. An exquisite engineering art has been developed balancing between multiplicity of function and density of devices. In any event, hardware always operates at a level more primitive than that at which we care to program. The processes that transform our Lisp programs to "machine" programs are themselves abstract models which we program. Their study and creation give a great deal of insight into the organizational programs associated with programming arbitrary models. Of course the computer itself can be so modeled. Think of it: the behavior of the smallest physical switching element is modeled by quantum mechanics described by differential equations whose detailed behavior is captured by numerical approximations represented in computer programs executing on computers composed of ...!

It is not merely a matter of tactical convenience to separately identify the three foci. Even though, as they say, it's all in the head, this logical separation induces an acceleration of symbolic traffic between these foci whose richness, vitality, and potential is exceeded in human experience only by the evolution of life itself. At best, relationships between the foci are metastable. The computers are never large enough or fast enough. Each breakthrough in hardware technology leads to more massive programming enterprises, new organizational principles, and an enrichment of abstract models. Every reader should ask himself periodically "Toward what end, toward what end?" -- but do not ask it too often lest you pass up the fun of programming for the constipation of bittersweet philosophy.

Among the programs we write, some (but never enough) perform a precise mathematical function such as sorting or finding the maximum of a sequence of numbers, determining primality, or finding the square root. We call such programs algorithms, and a great deal is known of their optimal behavior, particularly with respect to the two important parameters of execution time and data storage requirements. A programmer should acquire good algorithms and idioms. Even though some programs resist precise specifications, it is the responsibility of the programmer to estimate, and always to attempt to improve, their performance.

Lisp is a survivor, having been in use for about a quarter of a century. Among the active programming languages only Fortran has had a longer life. Both languages have supported the programming needs of important areas of application, Fortran for scientific and engineering computation and Lisp for artificial intelligence. These two areas continue to be important, and their programmers are so devoted to these two languages that Lisp and Fortran may well continue in active use for at least another quarter-century.

Lisp changes. The Scheme dialect used in this text has evolved from the original Lisp and differs from the latter in several important ways, including static scoping for variable binding and permitting functions to yield functions as values. In its semantic structure Scheme is as closely akin to Algol 60 as to early Lisps. Algol 60, never to be an active language again, lives on in the genes of Scheme and Pascal. It would be difficult to find two languages that are the communicating coin of two more different cultures than those gathered around these two languages. Pascal is for building pyramids -- imposing, breathtaking, static structures built by armies pushing heavy blocks into place. Lisp is for building organisms -- imposing, breathtaking, dynamic structures built by squads fitting fluctuating myriads of simpler organisms into place. The organizing principles used are the same in both cases, except for one extraordinarily important difference: The discretionary exportable functionality entrusted to the individual Lisp programmer is more than an order of magnitude greater than that to be found within Pascal enterprises. Lisp programs inflate libraries with functions whose utility transcends the application that produced them. The list, Lisp's native data structure, is largely responsible for such growth of utility. The simple structure and natural applicability of lists are reflected in functions that are amazingly nonidiosyncratic. In Pascal the plethora of declarable data structures induces a specialization within functions that inhibits and penalizes casual cooperation. It is better to have 100 functions operate on one data structure than to have 10 functions operate on 10 data structures. As a result the pyramid must stand unchanged for a millennium; the organism must evolve or perish.

To illustrate this difference, compare the treatment of material and exercises within this book with that in any first-course text using Pascal. Do not labor under the illusion that this is a text digestible at MIT only, peculiar to the breed found there. It is precisely what a serious book on programming Lisp must be, no matter who the student is or where it is used.

Note that this is a text about programming, unlike most Lisp books, which are used as a preparation for work in artificial intelligence. After all, the critical programming concerns of software engineering and artificial intelligence tend to coalesce as the systems under investigation become larger. This explains why there is such growing interest in Lisp outside of artificial intelligence.

As one would expect from its goals, artificial intelligence research generates many significant programming problems. In other programming cultures this spate of problems spawns new languages. Indeed, in any very large programming task a useful organizing principle is to control and isolate traffic within the task modules via the invention of language. These languages tend to become less primitive as one approaches the boundaries of the system where we humans interact most often. As a result, such systems contain complex language-processing functions replicated many times. Lisp has such a simple syntax and semantics that parsing can be treated as an elementary task. Thus parsing technology plays almost no role in Lisp programs, and the construction of language processors is rarely an impediment to the rate of growth and change of large Lisp systems. Finally, it is this very simplicity of syntax and semantics that is responsible for the burden and freedom borne by all Lisp programmers. No Lisp program of any size beyond a few lines can be written without being saturated with discretionary functions. Invent and fit; have fits and reinvent! We toast the Lisp programmer who pens his thoughts within nests of parentheses.

Alan J. Perlis

New Haven, Connecticut

Preface to the LFE Edition

Unbound creativity is the power and the weakness of the Force. The Art of Programming Well lies in forging a balance between endless possibilities and strict discipline.

-- Cristina Videira Lopes, "Jedi Masters", on the history of Lisp and programming

In the spirit of Alan Perlis' "keeping fun in computing" and Cristina Lopes' entreaty for creativity bounded by the practical, the preface to the LFE edition of this book will cover the following topics:

- A Tale of Lisp Not Often Told

- The Origins of Erlang and LFE

- The Place of Lisp in the 21st Century

- Changes from the Second Edition

- Source Code for This Book

The Hidden Origins of Lisp

Beginnings are important. They may not fully dictate the trajectory of what follows from them, yet they do seem to have a profound impact on the character of their effects. For the human observer, beginnings are also a source of inspiration: good beginnings lend a strength of purpose, the possibility of greater good. The story of Lisp has a good beginning -- several of them, in fact -- closely tied to the theories of numbers, mathematical logic, functions and types as well as that of computing itself.

At their root, the histories of programming languages spring from, on the one hand, the practical considerations of engineering and developer experience, and on the other hand, the principle of computability. This, in turn, ultimately traces its beginnings to the fundamental concepts of arithmetic and mathematical logic: what are numbers and how do we define them rigorously? These questions were asked and considered -- sometimes from a fairly vague philosophical perspective -- by great minds such as Leibniz (late 1600s; drafts published posthumously), Boole (1847), Grassmann (1861), Peirce (1881), Frege (1884), and Dedekind (1888). It was the Italian mathematician Giuseppe Peano, though, who in 1889 finally identified and distilled the essence of these explorations in terms that were more precisely formulated than those of his peers or intellectual forebears. These were subsequently elaborated by successive generations of mathematicians prior to the advent of "high-level" programming languages in the 1950s.

Histories are complicated; complete ones are impossible and readable ones are necessarily limited and lacking in details. In our particular case, there is a complex lineage of mathematics leading to Lisp. However, for the sake of clarity and due to this being a preface and not a book in its own right, the mathematical and computational history leading to Lisp has been greatly simplified below. The four dominant historical figures discussed provide distinct insights and represent corresponding themes as mathematics evolved unwittingly toward a support for computing. Due to the limitation of scope, however, it might be better to view these as archetypes of mathematical discovery rather than historical figures one might come to know when reading a full history. Of the many themes one could discern and extract from these great minds, we focus on the following:

- Understanding and defining the underpinnings of arithmetic and logic ("What are numbers? What is counting?")

- Attempting to formally unify all of mathematics in a consistent framework of logic ("Can I express all of math in discrete logical assertions and statements?")

- Formally defining algorithms and computability ("Is there a procedure that can take any precise mathematical statement and decide whether the statement is true or false?")

- Creating the means by which symbolic computation and artificial reasoning could be made manifest ("Can we make machines solve problems that are usually considered to require intelligence?")1

Each major topic above depended -- in one form or another -- upon the preceding topic, and the four famous mathematicians listed below embodied each of these themes. Small excerpts from their lives and work are shared below, chosen because they are believed to have influenced the course of events that led to Lisp's inception.

1. An almost word-for-word quote from John McCarthy's January 1962 submission in the quarterly progress report for MIT's RLE, titled XXI. ARTIFICIAL INTELLIGENCE, page 189 on the original hard copy. The table of contents for the original is available here.

Giuseppe Peano

Figure P.1: Giuseppe Peano, circa 1910.

Giuseppe Peano was born 100 years before Lisp, in August of 1858 at his family's farmhouse in the north of Italy. At a young age he was recognized as having an exceptionally quick mind and, through the favor of an uncle, obtained a good early education in Turin, where he stayed not only for university but for the entirety of his career.

After graduating from the University of Turin with high honors, Peano was asked to stay on, providing assistance with the teaching responsibilities of the mathematics department. Within a few years, he began tackling problems in logic and exploring the foundations of the formal philosophy of mathematics. During this time, Peano introduced the world to his now-famous axioms.1, 2 In particular, the fifth axiom is considered the first definition of primitive recursive functions.3 In this same work Peano described the function of a variable with explicit recursive substitution.4 Both of these served as a great source of inspiration and insight to later generations.
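To make the link between the axioms and recursion concrete, here is a small sketch in modern notation. The symbol $$S$$ for the successor function and the use of $$0$$ as the base case are present-day conventions assumed for illustration, not Peano's original typography. Addition is defined by recursion on its second argument, and the induction axiom is what guarantees that these two equations determine $$a + b$$ for every natural number $$b$$:

$$
a + 0 = a, \qquad a + S(b) = S(a + b)
$$

For example, $$2 + 2 = 2 + S(S(0)) = S(2 + S(0)) = S(S(2 + 0)) = S(S(2)) = 4$$.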

From this point into the beginning of the 20th century, Peano was considered one of the leading figures in mathematical logic, alongside Frege and Russell. This was due to Peano's work on and advocacy for a unified formulation of mathematics cast in logic. Entitled Formulario Mathematico, it was first published in 1895, with multiple editions released between then and the last edition in 1908. Each subsequent edition was essentially a new work in its own right, with more finely honed formulas, presentation, and explanation wherein he shared his symbols for logic, a new mathematical syntax.

In 1897 at the First International Congress of Mathematicians in Zurich, Peano co-chaired the track on "Arithmetic and Algebra" and was invited to deliver a keynote on logic. Between that event and its successor in 1900, he published more of his work on the Formulario. By these and other activities, when Peano arrived in Paris for the international congresses of both mathematics and philosophy, he was at the peak of his career in general, and the height of his development of mathematical logic in particular. At this event Peano along with Burali-Forti, Padoa, Pieri, Vailati, and Vacca were said to have been "supreme" and to have "absolutely dominated" the discussions in the field of the philosophy of sciences.5

Bertrand Russell was present at the first of these congresses and was so completely taken with the efficacy of Peano's approach to logic that upon receiving from Peano his collected works, he returned home to study them instead of remaining in Paris for the Mathematical Congress. A few months later he wrote to Peano, attaching a manuscript detailing the assessments he had been able to make, thanks to his recent and thorough study of Peano's works. Peano responded to him the following March congratulating Russell on "the facility and precision" with which he managed Peano's logical symbols; Peano published Russell's paper that July. However, this was only the beginning for Russell: the baton had been firmly passed to him and the advance towards a theory of computation had taken its next step.

1. This was in Peano's book of 1889 "Arithmetices principia, nova methodo exposita" (in English, The principles of arithmetic, presented by a new method).

2. In addition it was in this same period of time that Peano started creating various logic and set notations that are still in use today.

3. See Robert I. Soare's 1995 paper entitled "Computability and Recursion", page 5.

4. See the 2006 paper "History of Lambda-calculus and Combinatory Logic" by Felice Cardone and J. Roger Hindley, page 2.

5. See page 91 of Hubert C. Kennedy's 1980 hardcover edition of "Peano: Life and Works of Giuseppe Peano", Volume 4 of the "Studies in the History of Modern Science."

Bertrand Russell

Figure P.2: Bertrand Russell, 1958.

Bertrand Russell was born in 1872 into a family of the British aristocracy. His early life was colored with tragedy: by the time he was six years old, he had lost his mother, sister, father, and grandfather. He was a deeply pensive child naturally inclined towards philosophical topics, and by 1883 -- at the age of 11 -- he was set upon the path for the first half of his life. It was at this time that his brother was tutoring him on Euclid's geometry:

"This was one of the great events of my life, as dazzling as first love. I had not imagined that there was anything so delicious in the world. After I had learned the fifth proposition, my brother told me that it was generally considered difficult, but I had found no difficulty whatever. This was the first time it had dawned upon me that I might have some intelligence. From that moment until Whitehead and I finished Principia ... mathematics was my chief interest, and my chief source of happiness."1

Russell continues in his autobiography, sharing how this time also provided the initial impetus toward the Principia Mathematica:

"I had been told that Euclid proved things, and was much disappointed that he started with axioms. At first I refused to accept them unless my brother could offer me some reason for doing so, but he said: 'If you don't accept them we cannot go on', and as I wished to go on, I reluctantly admitted them pro tem. The doubt as to the premisses of mathematics which I felt at that moment remained with me, and determined the course of my subsequent work."

In 1900, Russell attended the First International Congress of Philosophy, where he had been invited to read a paper. In his autobiography, he describes this fateful event:

"The Congress was a turning point in my intellectual life, because I there met Peano. I already knew him by name and had seen some of his work, but had not taken the trouble to master his notation. In discussions at the Congress I observed that he was more precise than anyone else, and that he invariably got the better of any argument upon which he embarked. As the days went by, I decided that this must be owing to his mathematical logic. I therefore got him to give me all his works, and as soon as the Congress was over I retired to Fernhurst to study quietly every word written by him and his disciples. It became clear to me that his notation afforded an instrument of logical analysis such as I had been seeking for years, and that by studying him I was acquiring a new powerful technique for the work that I had long wanted to do. By the end of August I had become completely familiar with all the work of his school. I spent September in extending his methods to the logic of relations. It seemed to me in retrospect that, through that month, every day was warm and sunny. The Whiteheads stayed with us at Fernhurst, and I explained my new ideas to him. Every evening the discussion ended with some difficulty, and every morning I found that the difficulty of the previous evening had solved itself while I slept. The time was one of intellectual intoxication. My sensations resembled those one has after climbing a mountain in a mist when, on reaching the summit, the mist suddenly clears, and the country becomes visible for forty miles in every direction. For years I had been endeavoring to analyse the fundamental notions of mathematics, such as order and cardinal numbers. Suddenly, in the space of a few weeks, I discovered what appeared to be definitive answers to the problems which had baffled me for years. 
And in the course of discovering these answers, I was introducing a new mathematical technique, by which regions formerly abandoned to the vaguenesses of philosophers were conquered for the precision of exact formulae. Intellectually, the month of September 1900 was the highest point of my life."2

Russell sent an early edition of the Principia to Peano after working on it for three years. A biographer of Peano noted that he "immediately recognized its value ... and wrote that the book 'marks an epoch in the field of philosophy of mathematics.'"3 Over the course of the remaining decade, Russell and Whitehead continued to collaborate on the Principia, a work that ultimately inspired Gödel's incompleteness theorems and Church's $$\lambda$$-calculus.

1. The 1998 reissued hardback "Autobiography" of Bertrand Russell, pages 30 and 31.

2. Ibid., page 147.

3. Kennedy, pages 105-106.

Alonzo Church

Figure P.3: Alonzo Church, 1943.

Alonzo Church was born in Washington, D.C. in 1903.1 His great-grandfather (originally from Vermont) was not only a professor of mathematics and astronomy at the University of Georgia, but later became its president.2 Church graduated from a Connecticut prep school in 1920 and then enrolled in Princeton to study mathematics. He published his first paper as an undergraduate and then continued at Princeton, earning his Ph.D. in just three years.

While a graduate student, Church was hit by a trolley car and spent time in a hospital where he met Julia Kuczinski3 -- they were married a year later and remained inseparable until her death, 51 years later. Church had a reputation for being a bit quirky: he never drove a car or typed; he was extremely neat and fastidious; he walked everywhere and often hummed to himself while he did so; he loved reading science fiction magazines;4 a night owl, he often did his best work late at night. Though he had solitary work habits, his list of Ph.D. students is impressive, including the likes of Turing, Kleene, and Rosser.

Perhaps one of Church's more defining characteristics was his drive: he deliberately focused on prominent problems in mathematics and attacked them with great force of will. A few of the problems he had focused on in the early 1930s were:

- Known paradoxes entailed by Bertrand Russell's theory of types 5

- David Hilbert's Entscheidungsproblem, and

- The implications of Gödel's completeness theorem.

These were some of the most compelling challenges in mathematics at that time. All of them ended up meeting at the crossroads of the λ‑calculus.

Church had started working on the λ‑calculus when attempting to address the Russell Paradox 6. However, it was not that goal toward which the λ‑calculus was ultimately applied. Instead, it became useful -- essential, even -- in his efforts to define what he called "calculability" and what is now more commonly referred to as computability.7 In this the λ‑calculus was an unparalleled success, allowing Church to solve the Entscheidungsproblem using the concept of recursive functions.

Syntactically, Church's λ‑notation made a significant improvement upon that found in the Principia Mathematica.8 Given the Principia phrase $$\phi \hat{x}$$ and the λ‑calculus equivalent, $$\lambda x \phi x$$, one benefits from the use of the latter by virtue of the fact that it unambiguously states that the variable $$x$$ is bound by the term-forming operator $$\lambda$$. This innovation was necessary for Church's work and a powerful tool that was put to use by John McCarthy when he built the first programming language which used the λ‑calculus: Lisp.
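As a small illustration of what binding buys us, consider applying such a term to an argument. This is a sketch in present-day notation, written with the now-common dot after the bound variable; the argument $$a$$ and the concrete body $$x + 1$$ are hypothetical choices made only for this example:

$$
(\lambda x.\, \phi x)\, a \;\to_\beta\; \phi a
\qquad \text{for instance} \qquad
(\lambda x.\, x + 1)\, 2 \;\to_\beta\; 2 + 1 = 3
$$

Because $$\lambda$$ names the variable it binds, renaming that variable consistently leaves the meaning unchanged: $$\lambda x.\, \phi x$$ and $$\lambda y.\, \phi y$$ denote the same function.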

1. The majority of the material for this section has been adapted from the Introduction to the Collected Works of Alonzo Church, MIT Press (not yet published).

2. This was when the University of Georgia was still called Franklin College.

3. She was there in training to become a nurse.

4. He would also write letters to the editors when the science fiction writers got their science wrong.

5. These complications were known and discussed by Russell himself at the time of Principia's publication.

6. See Russell's paradox.

7. "Computability" was the term which Turing used.

8. See the discussion of "Propositional Functions" in the section "The Notation in Principia Mathematica": http://plato.stanford.edu/entries/pm-notation/#4. Note that the section of the Principia Mathematica which they reference in that linked discussion on the Stanford site is at the beginning of "Section B: Theory of Apparent Variables" in the Principia.

John McCarthy

Figure P.4: John McCarthy, 1965.

John McCarthy was born in 1927, in the city of Boston. Due to difficulties finding work during the Great Depression, the family moved to New York, then finally settled in Los Angeles. Having established an early aptitude and proficiency in mathematics, McCarthy skipped two years of math upon his enrollment at Caltech. The year he received his undergraduate degree, he attended the 1948 Hixon Symposium on Cerebral Mechanisms in Behavior. The speakers at the symposium represented an intersection of mathematics, computation, and psychology. They were as follows:

- Professor Ward C. Halstead, University of Chicago

- Professor Heinrich Klüver, University of Chicago

- Professor Wolfgang Köhler, Swarthmore College

- Professor K. S. Lashley, Harvard University

- Dr. R. Lorente de Nó, Rockefeller Institute for Medical Research

- Professor Warren S. McCulloch, University of Illinois

- Dr. John von Neumann, Institute for Advanced Study

At the symposium John von Neumann presented his paper "The General and Logical Theory of Automata",1 after which McCarthy became intrigued with the idea of developing machines that could think as people do. McCarthy remained at Caltech for one year of grad school, but then pursued the remainder of his Ph.D. at Princeton, considered by him to be the greater institution for the study of mathematics. In discussions with an enthusiastic von Neumann at Princeton, McCarthy shared his ideas about interacting finite automata -- ideas inspired by von Neumann's talk at the Hixon Symposium.

After McCarthy completed his Ph.D. dissertation, Claude Shannon invited him and his friend Marvin Minsky to work at Bell Labs in New Jersey for the summer. McCarthy and Shannon collaborated on assembling a volume of papers entitled "Automata Studies," though it was ultimately a bit of a disappointment to McCarthy since so few submissions concerned the topic of his primary interest: machine intelligence. A few years later, he had the opportunity to address this by proposing a summer research project which he and the head of IBM's Information Research pitched to Shannon and Minsky. They agreed, and a year later held the first Artificial Intelligence workshop at the Dartmouth campus in New Hampshire.

It was here, thanks to Allen Newell and Herb Simon, that McCarthy was exposed to the idea of list processing for a "logical language" Newell and Simon were working on (later named IPL). McCarthy initially had high hopes for this effort but upon seeing that its implementation borrowed heavily from assembly, he gave up on it. That, in conjunction with his inability to gain any traction with the maintainers of FORTRAN for the support of recursion or conditionals, inspired him to create a language that suited his goals of exploring machine intelligence. With the seeds of Lisp sown in 1956, it was two more years before development of Lisp began. Two years later a special project was established to carry out this work under the auspices of the MIT Research Laboratory of Electronics which granted McCarthy and his team one room, one secretary, two programmers, a key punch and six grad students.2 The MIT AI project was founded and the work of creating Lisp was begun.

A transcript of the talk is available in Volume V of John von Neumann's "Collected Works". The topics covered were as follows: 1. Preliminary Considerations; 2. Discussion of Certain Relevant Traits of Computing Machines; 3. Comparisons Between Computing Machines And Living Organisms; 4. The Future Logical Theory of Automata; 5. Principles of Digitalization; 6. Formal Neural Networks; and 7. The Concept of Complication and Self-Reproduction. The talk concluded with an intensive period of question and answer, also recorded in the above-mentioned volume.

Marvin Minsky and John McCarthy founded the MIT AI Lab together when McCarthy caught the acting head of the department, Jerome Wiesner, in the hallway and asked him for permission to do it. Wiesner responded with "Well, what do you need?" When McCarthy gave him the list, Wiesner added "How about 6 graduate students?" as the department had agreed to support six mathematics students, but had yet to find work for them. See On John McCarthy’s 80th Birthday, in Honor of his Contributions, page 3.

A Recap of Erlang's Genesis

Though the LFE edition of Structure and Interpretation of Computer Programs is a reworking of the Scheme original to LFE, and while both versions focus entirely upon Lisp, we would be remiss if a brief history of Erlang -- upon which LFE firmly rests -- were not covered as well. One of the most concise and informative sources of Erlang history is the paper that Joe Armstrong wrote1 for the third History of Programming Languages2 conference.

What evolved into Erlang started out as the simple task of "solving Ericsson's software problem." 3 Practically, this involved a series of initial experiments in programming simple telephony systems in a variety of languages. The results of these experiments, listed below, fueled the next round:

- Small languages seemed better at succinctly addressing the problem space.

- The functional programming paradigm was appreciated, if sometimes viewed as awkward.

- Logic programming provided the most elegant solutions in the given problem space.

- Support for concurrency was viewed as essential.

Joe Armstrong's first attempts at Erlang were done in 1985 using the Smalltalk programming language. He switched away from this after Roger Skagervall observed that the logic Joe had developed was really just thinly veiled Prolog. The development of a robust systems programming language for telephony was further refined with advice from Mike Williams, already a veteran in programming concurrent systems. He said that for a concurrent programming language to be efficient it had to keep in mind three key things that dominated its problem space: 4

- The time needed to create a process

- The time required to perform a context switch between two processes

- The amount of time taken to copy a message from one process to another

Furthermore, Bjarne Däcker defined the following capabilities for a language to be useful when programming telecommunications switches:

- To handle high-concurrency

- To handle soft real-time

- To support non-local, distributed computing

- To enable hardware interaction

- To support very large scale software systems

- To support complex interactions

- To provide non-stop operation (on the order of years)

- To allow for system updates without downtime

- To provide high-nines reliability

- To provide fault-tolerance for both hardware and software

With these guiding principles, Erlang in its Prolog form emerged over the course of 1986 and 1987. Robert Virding joined Armstrong in this effort during this time period, 5 helping with such tasks as rewriting the initial prototype and improving overall concurrency performance.

See Armstrong's paper here.

HOPL III was held in San Diego, early June of 2007. From the perspective of LFE, it is interesting to note that each language comprising its essence has been covered at HOPL conferences: 1. HOPL I, 1978 John McCarthy presented "History of Lisp"; 2. HOPL II, 1993 Guy L. Steele, Jr. and Richard P. Gabriel presented "The Evolution of Lisp," a continuation of McCarthy's 1978 talk; 3. HOPL III, 2007 Joe Armstrong presented "A History of Erlang."

Armstrong 2007, page 3.

Ibid., page 2.

"Robert Virding and Joe Armstrong - The History of the Erlang Virtual Machine", Erlang Factory 2010, London.

The Inspiration for LFE

It wasn't until 2007, after 20 years of contributions to Erlang, that Robert Virding decided to start experimenting with a Lisp running on the Erlang VM. 1 Initially explored as a Lisp 1, the language changed when Virding switched to separating the name spaces for functions and variables, more like the Lisp 2 of Common Lisp, having determined that this was a better overall fit with the internals of the Erlang virtual machine. 2

After over 7 years of development, LFE now supports such features as:

- Non-hygienic macros

- Various forms borrowed from Scheme, Maclisp, and Common Lisp

- A REPL which allows for the definition of functions, records, and macros 3

- Immutable data

- Pattern matching

- Modules

- Functional programming paradigm

- Erlang data types

- Light-weight language processes

- Hot-loading of code on running systems

- 100% compatibility with Core Erlang and OTP

As to its own origins, Virding shared the following as the primary motivating factors: 4

- He was an "old Lisper" and was therefore quite interested in implementing a Lisp.

- He was curious as to what a Lisp on the Erlang VM would look like and how it would run. (It had always been a goal of his to make a Lisp which was specially designed for running on the Erlang VM and able to fully interact with Erlang/OTP.)

- He wanted to experiment with compiling a language to Core Erlang. 5

- He was looking for some interesting programming projects that were not too large to do in his spare time.

- He likes implementing languages.

- He also thought it would be a fun problem to solve: it was an open-ended problem with lots of interesting parts.

Once again, the spirit of exploration conspired with good, clean fun to bring something new and interesting into the world while at the same time reflecting a rich and varied history. On the one hand, LFE has a Lisp heritage stretching back through the $$\lambda$$-Calculus to Peano. On the other, it is a systems programming language benefiting from Prolog vestiges such as pattern-matching and list comprehensions. Hardened through industrial use, it rests upon a VM which powers 40% of the world's telecommunications traffic. 6 This is a potentially powerful combination offering unique capabilities to the distributed systems metaprogrammer.

Thread on the LFE mail list entitled LFE History

LFE also distinguishes between functions based on arity, thus it is regularly jokingly referred to as a Lisp 2+.

This is in stark contrast to the Erlang shell which does not allow the developer to define functions (except as anonymous ones assigned to a variable); Erlang also does not have Lisp-style macros.

He shared this information on the LFE mail list in response to a query regarding the inspiration for LFE. See the original thread.

Core Erlang had been adopted by the Erlang compiler in 2001. See Carlsson's paper from that year, "An introduction to Core Erlang", page 2.

This is something often quoted in Erlang marketing materials; the number is based upon the market share Ericsson has in deployed systems world-wide.

The Place of Lisp in the 21st Century

The ups-and-downs of Lisp since its inception in 1958 have been covered in various media since the late 1970s. While Lisp continues to have its supporters and detractors, one thing is abundantly clear: many of the characteristics initially touted as making Lisp unique and powerful are now shared by a vast majority of modern programming languages. By some, this is considered the greatest triumph of Lisp, a source of inspiration for much of modern computing. The inevitable question is then asked: what use is Lisp, more than 50 years after its creation, when the world of computing -- both research and industry -- is so vastly different from what it was in Lisp's early days?

The first answer usually given is one that requires very little thought: macros. There are numerous books written on this topic and we will not cover it further in this preface, but accept as a given that the support of Lisp-style macros in any programming language is a powerful tool. Once we get past the obvious answer, subtler ones come to the fore. For instance, the simplicity of the syntax and similarity to parenthetically grouped algebra expressions make for an easy programming introduction to students of a middle school age. This simplicity is also something offering great insights to experienced programmers. Alan Kay's famous quote of Lisp being the software equivalent of Maxwell's famous partial differential equations for classical electrodynamics1 derives its inspiration from this simplicity: one can fit the essence of the language in one's head or on a single sheet of paper.2

The education point is important: if we cannot teach the basics of a science or a branch of mathematics -- regardless of how profound it may be -- it has ceased to be a science and should at that point be considered a superstition or cargo cult, with its practitioners engaged in a great deal of activity (or even lucrative commerce) but having no understanding of the principles which form the basis of their work. However, to be a compelling focus of study, the value of Lisp in the 21st century must hold more than simply the promise of clarity and the means by which one might create domain-specific languages. To be genuinely pertinent, it must reach beyond the past and the present to provide keys to undiscovered doors for each new generation of intrepid programmers.

And here the answer arrives, not as some astounding epiphany, but again in humble simplicity: Lisp's fun and its beauty rest not only in its syntactic elegance but in its power of expression. This is specifically important for the adventurer: if you want to create something new, explore some new programmatical territory, you need tools at your fingertips which will allow you to do so flexibly and quickly, with as little overhead as possible. Otherwise the moment of inspiration can be too quickly lost, the creative process swallowed in a mire too heavy with infrastructure and process. By putting the power of Lisps into the hands of each generation's aspiring programmers, we are ensuring that they have what is necessary to accomplish feats which might seem miraculous to us should we see them now -- as genuinely new ideas often appear (when appreciated). A world that sees the rise of quantum computing or the molecular programming of nano-scale machines or as yet undreamt technological capabilities will need programmers who have the ability to iterate quickly and try out new ideas, easily able to see that which should be abandoned and that which should be taken up. This is especially important for the survival of free software: as long as our societies are able to produce languages, software, and systems which individuals or small groups may attain understanding and mastery over, software freedom will prevail. Systems that are so complex as to require an industry to manage them are no longer within the domain of motivated and curious individuals, but rather that of organizations with sufficient capital to maintain the necessary infrastructure.

Thus, as we point our technological society towards its future with each action we take, as individuals and as a group, we have a responsibility to maintain the tools which will ensure the freedom of future generations, the basic freedom of the tool-maker, the hacker, the artist, and the poet. Lisp is not the only answer to this particular need, but it has shown its strengths in this regard over the past 50 years, and if the last 10 years of re-discovery and innovation in the world of programming is any indication, Lisp is alive and well and will likely be with us for a long time to come.

And there will be even more fun to be had by all.

See the ACM-hosted interview with Alan Kay.

Or, as the case may be, the lower $$\frac{2}{3}$$rds of a single page.

Notes on Changes from the Original

The original text of Structure and Interpretation of Computer Programs was published in the 80s, but has material in it preceding even that time, dating back to courses taught at MIT in the 60s. Though the essence of what was taught in those lecture halls -- and with the original SICP text -- remains just as relevant today, much context has changed in the field of computing since then. As such, some changes in a new edition are to be expected.

Furthermore, this edition of SICP is an adaptation for a different programming language -- though still a Lisp -- whose syntax differs in varying degrees. More than syntax, though, LFE is built upon a very different VM, one whose focus is on such concerns as fault-tolerance, massive scale, and reliability.

As such, we have taken liberties in our edition of SICP, hopefully with little to no impact on its essence. These liberties include the following:

- The Lisp-2 syntax of LFE is used instead of the Lisp-1 syntax of a Scheme.

- As an extension of that first point, features such as pattern matching in function heads, guards, multi-arity functions, etc., are used extensively, often leading to more concise functions than their original Scheme counterparts.

- When pertinent, features from LFE and Erlang are introduced in conjunction with the subject matter of the original text.

- Very rarely some of the original text or footnotes might be omitted when not at all applicable to LFE.

- LFE doesn't support nested defuns like Scheme supports nested defines; flet and fletrec are used instead. However, as a result, these are introduced before let. This is awkward, but not disastrous.

- The LFE Edition uses LaTeX, and as such, equations are much easier to read.

- Examples are updated to modern, accepted forms (e.g., the Ackermann function).

- Exercises are broken out into their own sections (while preserving their original order and placement in the chapters).
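As a rough illustration of the LFE features named in the list above, the following sketch shows pattern matching in a function head, a guard, a multi-arity function, and fletrec standing in for a nested definition. The function names (factorial, sum, count-down) are hypothetical and chosen only for this example; they are not taken from the chapter code.

```lfe
;; Pattern matching in the function head, with a guard on the second clause.
(defun factorial
  ((0) 1)
  ((n) (when (> n 0))
   (* n (factorial (- n 1)))))

;; Multi-arity: sum/1 and sum/2 are distinct functions that share a name.
(defun sum (lst)
  (sum lst 0))

(defun sum
  (('() acc) acc)
  (((cons x xs) acc) (sum xs (+ x acc))))

;; fletrec in place of a nested defun: a local, recursive helper.
;; (count-down n) builds the list from n down to 0.
(defun count-down (n)
  (fletrec ((loop (i acc)
              (if (< i 0)
                  (lists:reverse acc)
                  (loop (- i 1) (cons i acc)))))
    (loop n '())))
```

In a Scheme version each of these would typically be a single define with an explicit conditional and, for the helper, a nested define; the LFE forms move that case analysis into the function head.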

Some of the terminology in the text has also been changed to maintain consistency with that used by the Erlang and LFE community. Most prominently, readers of the original text will notice that we do not use the term "procedure" but have instead opted for the more common term "function."

Obtaining the Book and Related Code

The source code for the LFE edition of this book uses the same license as the original: Creative Commons Attribution-Noncommercial 3.0 Unported License.

Book Source

There are two related sources available for this book:

- The original text for the second edition is available on the MIT Press site.

- The source code for the LFE edition (mostly Markdown) is available on Github.

You may obtain a copy of the source code via git:

$ git clone https://github.com/lfe/sicp.git

or direct download: https://github.com/lfe/sicp/archive/master.zip.

Code Used in the Book

Each chapter of this book defines functions and uses code from the previous chapters. The interactive LFE REPL sessions are saved as files in the book repository. They are available in each chapter directory as code.lfe.

Running the Code in the Book

To follow along in each chapter or to run the code that has been provided, you will need the following:

- A recent version of Erlang (the code in this book was tested with Erlang 17.4)

- LFE

The download and installation of Erlang is not covered in this book; information for that is available from many other sources with the kind help of your favourite Internet search engine.

Getting and Compiling LFE

You may obtain LFE via git, creating a subdirectory in the sicp directory:

cd sicp

git clone https://github.com/rvirding/lfe.git

or direct download: https://github.com/rvirding/lfe/archive/develop.zip. If you use the direct download method, move and rename the unzipped directory to match the git clone method:

cd sicp

unzip ~/Downloads/lfe-develop.zip

mv lfe-develop ./lfe

Now you can compile LFE, start the REPL, and load some code (you can safely ignore compiler warnings):

cd lfe

make

cd ../

./lfe/bin/lfe

Loading the Chapter Code

Erlang/OTP 17 [erts-6.3] [source] [64-bit] [smp:4:4] ...

LFE Shell V6.3 (abort with ^G)

> (run "ch1/code.lfe")

Loading Chapter 1 code ...

Chapter 1 loaded.

ok

Quick check to make sure that everything loaded as expected:

> (square 2)

4

Preface to the Second Edition

Is it possible that software is not like anything else, that it is meant to be discarded: that the whole point is to always see it as a soap bubble?

-- Alan J. Perlis

The material in this book has been the basis of MIT's entry-level computer science subject since 1980. We had been teaching this material for four years when the first edition was published, and twelve more years have elapsed until the appearance of this second edition. We are pleased that our work has been widely adopted and incorporated into other texts. We have seen our students take the ideas and programs in this book and build them in as the core of new computer systems and languages. In literal realization of an ancient Talmudic pun, our students have become our builders. We are lucky to have such capable students and such accomplished builders.

In preparing this edition, we have incorporated hundreds of clarifications suggested by our own teaching experience and the comments of colleagues at MIT and elsewhere. We have redesigned most of the major programming systems in the book, including the generic-arithmetic system, the interpreters, the register-machine simulator, and the compiler; and we have rewritten all the program examples to ensure that any Scheme implementation conforming to the IEEE Scheme standard (IEEE 1990) will be able to run the code.

This edition emphasizes several new themes. The most important of these is the central role played by different approaches to dealing with time in computational models: objects with state, concurrent programming, functional programming, lazy evaluation, and nondeterministic programming. We have included new sections on concurrency and nondeterminism, and we have tried to integrate this theme throughout the book.

The first edition of the book closely followed the syllabus of our MIT one-semester subject. With all the new material in the second edition, it will not be possible to cover everything in a single semester, so the instructor will have to pick and choose. In our own teaching, we sometimes skip the section on logic programming (section 13.4, Logic Programming), we have students use the register-machine simulator but we do not cover its implementation (section 14.2, A Register-Machine Simulator), and we give only a cursory overview of the compiler (section 14.5, Compilation). Even so, this is still an intense course. Some instructors may wish to cover only the first three or four chapters, leaving the other material for subsequent courses.

The World-Wide-Web site http://www-mitpress.mit.edu/sicp provides support for users of this book. This includes programs from the book, sample programming assignments, supplementary materials, and downloadable implementations of the Scheme dialect of Lisp.

Preface to the First Edition

"A computer is like a violin. You can imagine a novice trying first a phonograph and then a violin. The latter, he says, sounds terrible. That is the argument we have heard from our humanists and most of our computer scientists. Computer programs are good, they say, for particular purposes, but they aren't flexible. Neither is a violin, or a typewriter, until you learn how to use it."

-- Marvin Minsky, "Why Programming Is a Good Medium for Expressing Poorly-Understood and Sloppily-Formulated Ideas"

"The Structure and Interpretation of Computer Programs" is the entry-level subject in computer science at the Massachusetts Institute of Technology. It is required of all students at MIT who major in electrical engineering or in computer science, as one-fourth of the "common core curriculum," which also includes two subjects on circuits and linear systems and a subject on the design of digital systems. We have been involved in the development of this subject since 1978, and we have taught this material in its present form since the fall of 1980 to between 600 and 700 students each year. Most of these students have had little or no prior formal training in computation, although many have played with computers a bit and a few have had extensive programming or hardware-design experience.

Our design of this introductory computer-science subject reflects two major concerns. First, we want to establish the idea that a computer language is not just a way of getting a computer to perform operations but rather that it is a novel formal medium for expressing ideas about methodology. Thus, programs must be written for people to read, and only incidentally for machines to execute. Second, we believe that the essential material to be addressed by a subject at this level is not the syntax of particular programming-language constructs, nor clever algorithms for computing particular functions efficiently, nor even the mathematical analysis of algorithms and the foundations of computing, but rather the techniques used to control the intellectual complexity of large software systems.

Our goal is that students who complete this subject should have a good feel for the elements of style and the aesthetics of programming. They should have command of the major techniques for controlling complexity in a large system. They should be capable of reading a 50-page-long program, if it is written in an exemplary style. They should know what not to read, and what they need not understand at any moment. They should feel secure about modifying a program, retaining the spirit and style of the original author.

These skills are by no means unique to computer programming. The techniques we teach and draw upon are common to all of engineering design. We control complexity by building abstractions that hide details when appropriate. We control complexity by establishing conventional interfaces that enable us to construct systems by combining standard, well-understood pieces in a "mix and match" way. We control complexity by establishing new languages for describing a design, each of which emphasizes particular aspects of the design and deemphasizes others.

Underlying our approach to this subject is our conviction that "computer science" is not a science and that its significance has little to do with computers. The computer revolution is a revolution in the way we think and in the way we express what we think. The essence of this change is the emergence of what might best be called procedural epistemology -- the study of the structure of knowledge from an imperative point of view, as opposed to the more declarative point of view taken by classical mathematical subjects. Mathematics provides a framework for dealing precisely with notions of "what is." Computation provides a framework for dealing precisely with notions of "how to."

In teaching our material we use a dialect of the programming language Lisp. We never formally teach the language, because we don't have to. We just use it, and students pick it up in a few days. This is one great advantage of Lisp-like languages: They have very few ways of forming compound expressions, and almost no syntactic structure. All of the formal properties can be covered in an hour, like the rules of chess. After a short time we forget about syntactic details of the language (because there are none) and get on with the real issues -- figuring out what we want to compute, how we will decompose problems into manageable parts, and how we will work on the parts. Another advantage of Lisp is that it supports (but does not enforce) more of the large-scale strategies for modular decomposition of programs than any other language we know. We can make procedural and data abstractions, we can use higher-order functions to capture common patterns of usage, we can model local state using assignment and data mutation, we can link parts of a program with streams and delayed evaluation, and we can easily implement embedded languages. All of this is embedded in an interactive environment with excellent support for incremental program design, construction, testing, and debugging. We thank all the generations of Lisp wizards, starting with John McCarthy, who have fashioned a fine tool of unprecedented power and elegance.

Scheme, the dialect of Lisp that we use, is an attempt to bring together the power and elegance of Lisp and Algol. From Lisp we take the metalinguistic power that derives from the simple syntax, the uniform representation of programs as data objects, and the garbage-collected heap-allocated data. From Algol we take lexical scoping and block structure, which are gifts from the pioneers of programming-language design who were on the Algol committee. We wish to cite John Reynolds and Peter Landin for their insights into the relationship of Church's lambda calculus to the structure of programming languages. We also recognize our debt to the mathematicians who scouted out this territory decades before computers appeared on the scene. These pioneers include Alonzo Church, Barkley Rosser, Stephen Kleene, and Haskell Curry.

Acknowledgments

We would like to thank the many people who have helped us develop this book and this curriculum.

Our subject is a clear intellectual descendant of "6.231," a wonderful subject on programming linguistics and the lambda calculus taught at MIT in the late 1960s by Jack Wozencraft and Arthur Evans, Jr.

We owe a great debt to Robert Fano, who reorganized MIT's introductory curriculum in electrical engineering and computer science to emphasize the principles of engineering design. He led us in starting out on this enterprise and wrote the first set of subject notes from which this book evolved.

Much of the style and aesthetics of programming that we try to teach were developed in conjunction with Guy Lewis Steele Jr., who collaborated with Gerald Jay Sussman in the initial development of the Scheme language. In addition, David Turner, Peter Henderson, Dan Friedman, David Wise, and Will Clinger have taught us many of the techniques of the functional programming community that appear in this book.

Joel Moses taught us about structuring large systems. His experience with the Macsyma system for symbolic computation provided the insight that one should avoid complexities of control and concentrate on organizing the data to reflect the real structure of the world being modeled.

Marvin Minsky and Seymour Papert formed many of our attitudes about programming and its place in our intellectual lives. To them we owe the understanding that computation provides a means of expression for exploring ideas that would otherwise be too complex to deal with precisely. They emphasize that a student's ability to write and modify programs provides a powerful medium in which exploring becomes a natural activity.

We also strongly agree with Alan Perlis that programming is lots of fun and we had better be careful to support the joy of programming. Part of this joy derives from observing great masters at work. We are fortunate to have been apprentice programmers at the feet of Bill Gosper and Richard Greenblatt.

It is difficult to identify all the people who have contributed to the development of our curriculum. We thank all the lecturers, recitation instructors, and tutors who have worked with us over the past fifteen years and put in many extra hours on our subject, especially Bill Siebert, Albert Meyer, Joe Stoy, Randy Davis, Louis Braida, Eric Grimson, Rod Brooks, Lynn Stein, and Peter Szolovits. We would like to specially acknowledge the outstanding teaching contributions of Franklyn Turbak, now at Wellesley; his work in undergraduate instruction set a standard that we can all aspire to. We are grateful to Jerry Saltzer and Jim Miller for helping us grapple with the mysteries of concurrency, and to Peter Szolovits and David McAllester for their contributions to the exposition of nondeterministic evaluation in chapter 4.

Many people have put in significant effort presenting this material at other universities. Some of the people we have worked closely with are Jacob Katzenelson at the Technion, Hardy Mayer at the University of California at Irvine, Joe Stoy at Oxford, Elisha Sacks at Purdue, and Jan Komorowski at the Norwegian University of Science and Technology. We are exceptionally proud of our colleagues who have received major teaching awards for their adaptations of this subject at other universities, including Kenneth Yip at Yale, Brian Harvey at the University of California at Berkeley, and Dan Huttenlocher at Cornell.

Al Moyé arranged for us to teach this material to engineers at Hewlett-Packard, and for the production of videotapes of these lectures. We would like to thank the talented instructors -- in particular Jim Miller, Bill Siebert, and Mike Eisenberg -- who have designed continuing education courses incorporating these tapes and taught them at universities and industry all over the world.

Many educators in other countries have put in significant work translating the first edition. Michel Briand, Pierre Chamard, and André Pic produced a French edition; Susanne Daniels-Herold produced a German edition; and Fumio Motoyoshi produced a Japanese edition. We do not know who produced the Chinese edition, but we consider it an honor to have been selected as the subject of an "unauthorized" translation.

It is hard to enumerate all the people who have made technical contributions to the development of the Scheme systems we use for instructional purposes. In addition to Guy Steele, principal wizards have included Chris Hanson, Joe Bowbeer, Jim Miller, Guillermo Rozas, and Stephen Adams. Others who have put in significant time are Richard Stallman, Alan Bawden, Kent Pitman, Jon Taft, Neil Mayle, John Lamping, Gwyn Osnos, Tracy Larrabee, George Carrette, Soma Chaudhuri, Bill Chiarchiaro, Steven Kirsch, Leigh Klotz, Wayne Noss, Todd Cass, Patrick O'Donnell, Kevin Theobald, Daniel Weise, Kenneth Sinclair, Anthony Courtemanche, Henry M. Wu, Andrew Berlin, and Ruth Shyu.

Beyond the MIT implementation, we would like to thank the many people who worked on the IEEE Scheme standard, including William Clinger and Jonathan Rees, who edited the R4RS, and Chris Haynes, David Bartley, Chris Hanson, and Jim Miller, who prepared the IEEE standard.

Dan Friedman has been a long-time leader of the Scheme community. The community's broader work goes beyond issues of language design to encompass significant educational innovations, such as the high-school curriculum based on EdScheme by Schemer's Inc., and the wonderful books by Mike Eisenberg and by Brian Harvey and Matthew Wright.

We appreciate the work of those who contributed to making this a real book, especially Terry Ehling, Larry Cohen, and Paul Bethge at the MIT Press. Ella Mazel found the wonderful cover image. For the second edition we are particularly grateful to Bernard and Ella Mazel for help with the book design, and to David Jones, TeX wizard extraordinaire. We also are indebted to those readers who made penetrating comments on the new draft: Jacob Katzenelson, Hardy Mayer, Jim Miller, and especially Brian Harvey, who did unto this book as Julie did unto his book Simply Scheme.

Finally, we would like to acknowledge the support of the organizations that have encouraged this work over the years, including support from Hewlett-Packard, made possible by Ira Goldstein and Joel Birnbaum, and support from DARPA, made possible by Bob Kahn.

Chapter 1

Building Abstractions with Functions

The acts of the mind, wherein it exerts its power over simple ideas, are chiefly these three: 1. Combining several simple ideas into one compound one, and thus all complex ideas are made. 2. The second is bringing two ideas, whether simple or complex, together, and setting them by one another so as to take a view of them at once, without uniting them into one, by which it gets all its ideas of relations. 3. The third is separating them from all other ideas that accompany them in their real existence: this is called abstraction, and thus all its general ideas are made.

-- John Locke, An Essay Concerning Human Understanding (1690)

We are about to study the idea of a computational process. Computational processes are abstract beings that inhabit computers. As they evolve, processes manipulate other abstract things called data. The evolution of a process is directed by a pattern of rules called a program. People create programs to direct processes. In effect, we conjure the spirits of the computer with our spells.

A computational process is indeed much like a sorcerer's idea of a spirit. It cannot be seen or touched. It is not composed of matter at all. However, it is very real. It can perform intellectual work. It can answer questions. It can affect the world by disbursing money at a bank or by controlling a robot arm in a factory. The programs we use to conjure processes are like a sorcerer's spells. They are carefully composed from symbolic expressions in arcane and esoteric programming languages that prescribe the tasks we want our processes to perform.

A computational process, in a correctly working computer, executes programs precisely and accurately. Thus, like the sorcerer's apprentice, novice programmers must learn to understand and to anticipate the consequences of their conjuring. Even small errors (usually called bugs or glitches) in programs can have complex and unanticipated consequences.

Fortunately, learning to program is considerably less dangerous than learning sorcery, because the spirits we deal with are conveniently contained in a secure way. Real-world programming, however, requires care, expertise, and wisdom. A small bug in a computer-aided design program, for example, can lead to the catastrophic collapse of an airplane or a dam or the self-destruction of an industrial robot.

Master software engineers have the ability to organize programs so that they can be reasonably sure that the resulting processes will perform the tasks intended. They can visualize the behavior of their systems in advance. They know how to structure programs so that unanticipated problems do not lead to catastrophic consequences, and when problems do arise, they can debug their programs. Well-designed computational systems, like well-designed automobiles or nuclear reactors, are designed in a modular manner, so that the parts can be constructed, replaced, and debugged separately.

Programming in Lisp

We need an appropriate language for describing processes, and we will use for this purpose the programming language Lisp. Just as our everyday thoughts are usually expressed in our natural language (such as English, French, or Japanese), and descriptions of quantitative phenomena are expressed with mathematical notations, our procedural thoughts will be expressed in Lisp. Lisp was invented in the late 1950s as a formalism for reasoning about the use of certain kinds of logical expressions, called recursion equations, as a model for computation. The language was conceived by John McCarthy and is based on his paper "Recursive Functions of Symbolic Expressions and Their Computation by Machine" (McCarthy 1960).

Despite its inception as a mathematical formalism, Lisp is a practical programming language. A Lisp interpreter is a machine that carries out processes described in the Lisp language. The first Lisp interpreter was implemented by McCarthy with the help of colleagues and students in the Artificial Intelligence Group of the MIT Research Laboratory of Electronics and in the MIT Computation Center.[1] Lisp, whose name is an acronym for LISt Processing, was designed to provide symbol-manipulating capabilities for attacking programming problems such as the symbolic differentiation and integration of algebraic expressions. It included for this purpose new data objects known as atoms and lists, which most strikingly set it apart from all other languages of the period.

Lisp was not the product of a concerted design effort. Instead, it evolved informally in an experimental manner in response to users' needs and to pragmatic implementation considerations. Lisp's informal evolution has continued through the years, and the community of Lisp users has traditionally resisted attempts to promulgate any "official" definition of the language. This evolution, together with the flexibility and elegance of the initial conception, has enabled Lisp, which is the second oldest language in widespread use today (only Fortran is older), to continually adapt to encompass the most modern ideas about program design. Thus, Lisp is by now a family of dialects, which, while sharing most of the original features, may differ from one another in significant ways. The dialect of Lisp used in the first two editions of this book was Scheme.[2] The dialect used in this edition of the book is Lisp Flavored Erlang.[3]

Because of its experimental character and its emphasis on symbol manipulation, Lisp was at first very inefficient for numerical computations, at least in comparison with Fortran. Over the years, however, Lisp compilers have been developed that translate programs into machine code that can perform numerical computations reasonably efficiently. And for special applications, Lisp has been used with great effectiveness.[4] Although Lisp has not yet overcome its old reputation as hopelessly inefficient, Lisp is now used in many applications where efficiency is not the central concern. For example, Lisp has become a language of choice for operating-system shell languages and for extension languages for editors and computer-aided design systems.

If Lisp is not a mainstream language, why are we using it as the framework for our discussion of programming? Because the language possesses unique features that make it an excellent medium for studying important programming constructs and data structures and for relating them to the linguistic features that support them. The most significant of these features is the fact that Lisp descriptions of processes, called functions, can themselves be represented and manipulated as Lisp data. The importance of this is that there are powerful program-design techniques that rely on the ability to blur the traditional distinction between "passive" data and "active" processes. As we shall discover, Lisp's flexibility in handling functions as data makes it one of the most convenient languages in existence for exploring these techniques. The ability to represent functions as data also makes Lisp an excellent language for writing programs that must manipulate other programs as data, such as the interpreters and compilers that support computer languages. Above and beyond these considerations, programming in Lisp is great fun.
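The idea that a description of a process can itself be treated as data can be made concrete with a small sketch, meant to be entered at the LFE REPL. It assumes only the standard `quote`, `car`, `cdr`, `cons`, and `eval` forms; the helper name `swap-operator` is hypothetical and introduced purely for illustration.

```lfe
;; A quoted expression is plain list data; quoting prevents evaluation.
;; `swap-operator` is a hypothetical helper written for this sketch.
(defun swap-operator (op expr)
  ;; Build a new expression: the new operator followed by the old operands.
  (cons op (cdr expr)))

(car '(+ 2 3 4))                       ; the symbol +
(cdr '(+ 2 3 4))                       ; the list (2 3 4)
(eval '(+ 2 3 4))                      ; 9
(swap-operator '* '(+ 2 3 4))          ; the list (* 2 3 4)
(eval (swap-operator '* '(+ 2 3 4)))   ; 24
```

The same list is inspected as passive data by `car` and `cdr` and then run as an active program by `eval`, which is the blurring of data and process that the paragraph above describes.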

[1] The Lisp 1 Programmer's Manual appeared in 1960, and the Lisp 1.5 Programmer's Manual (McCarthy 1965) was published in 1962. The early history of Lisp is described in McCarthy 1978.

[2] The two dialects in which most major Lisp programs of the 1970s were written are MacLisp (Moon 1978; Pitman 1983), developed at the MIT Project MAC, and Interlisp (Teitelman 1974), developed at Bolt Beranek and Newman Inc. and the Xerox Palo Alto Research Center. Portable Standard Lisp (Hearn 1969; Griss 1981) was a Lisp dialect designed to be easily portable between different machines. MacLisp spawned a number of subdialects, such as Franz Lisp, which was developed at the University of California at Berkeley, and Zetalisp (Moon 1981), which was based on a special-purpose processor designed at the MIT Artificial Intelligence Laboratory to run Lisp very efficiently. The Lisp dialect used in the first two editions of this book, called Scheme (Steele 1975), was invented in 1975 by Guy Lewis Steele Jr. and Gerald Jay Sussman of the MIT Artificial Intelligence Laboratory and later reimplemented for instructional use at MIT. Scheme became an IEEE standard in 1990 (IEEE 1990). The Common Lisp dialect (Steele 1982, Steele 1990) was developed by the Lisp community to combine features from the earlier Lisp dialects to make an industrial standard for Lisp. Common Lisp became an ANSI standard in 1994 (ANSI 1994).

[3] Lisp Flavored Erlang, more commonly referred to as LFE, is a Lisp dialect inspired by Common Lisp (and Scheme) that runs on the Erlang BEAM (also known as the "Erlang virtual machine"). LFE was created by Robert Virding (co-creator of the Erlang programming language with Joe Armstrong) in late 2007 in the same spirit that Alan Perlis describes in the dedication of this book: fun. The first version was released in early 2008. It received steady development work over the following four years, and in 2012 and 2013 it began to see an uptick in community interest, contributions, and third-party libraries. By 2014, companies were starting to use it on projects, and in 2015 startups began selecting it as their platform of choice for distributed systems programming.

[4] One such special application was a breakthrough computation of scientific importance -- an integration of the motion of the Solar System that extended previous results by nearly two orders of magnitude, and demonstrated that the dynamics of the Solar System is chaotic. This computation was made possible by new integration algorithms, a special-purpose compiler, and a special-purpose computer all implemented with the aid of software tools written in Lisp (Abelson et al. 1992; Sussman and Wisdom 1992).

The Elements of Programming

A powerful programming language is more than just a means for instructing a computer to perform tasks. The language also serves as a framework within which we organize our ideas about processes. Thus, when we describe a language, we should pay particular attention to the means that the language provides for combining simple ideas to form more complex ideas. Every powerful language has three mechanisms for accomplishing this:

- primitive expressions, which represent the simplest entities the language is concerned with,

- means of combination, by which compound elements are built from simpler ones, and

- means of abstraction, by which compound elements can be named and manipulated as units.

In programming, we deal with two kinds of elements: functions and data. (Later we will discover that they are really not so distinct.) Informally, data is "stuff" that we want to manipulate, and functions are descriptions of the rules for manipulating the data. Thus, any powerful programming language should be able to describe primitive data and primitive functions and should have methods for combining and abstracting functions and data.
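As a rough preview of the three mechanisms listed above, the following sketch shows one instance of each, written as forms to be entered at the LFE REPL. The function name `square` is an illustrative choice made for this sketch and is not taken from the text so far.

```lfe
;; Primitive expressions: a numeral evaluates to the number it names,
;; and + is a built-in (primitive) function.
486
(+ 137 349)            ; 486

;; Means of combination: simpler expressions nest into a compound one.
(+ (* 3 5) (- 10 6))   ; 19

;; Means of abstraction: a compound operation is given a name so that
;; it can be used as a unit. `square` is a name assumed for this sketch.
(defun square (x)
  (* x x))

(square 21)            ; 441
```

Once `square` is defined it can be used exactly like a primitive such as `+`, which is the point of having a means of abstraction.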

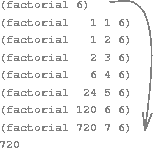

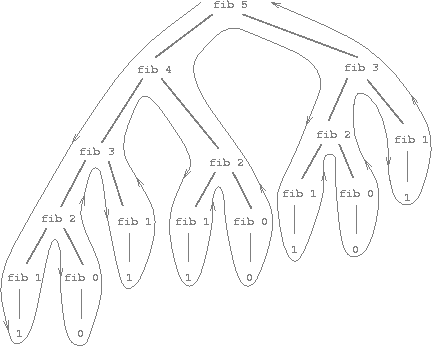

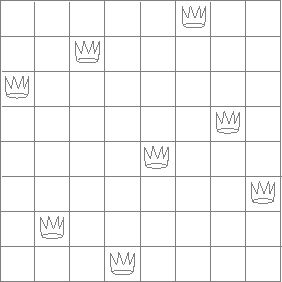

In this chapter we will deal only with simple numerical data so that we can focus on the rules for building functions.[1] In later chapters we will see that these same rules allow us to build functions to manipulate compound data as well.