LFE MACHINE MANUAL

Adapted from multiple sources

by Duncan McGreggor and Robert Virding

Published by Cowboys 'N' Beans Books

https://github.com/cnbbooks ◈ http://cnbb.pub/ ◈ info@cnbb.pub

First electronic edition published: 2020

Portions © 1974, David Moon

Portions © 1978-1981, Daniel Weinreb and David Moon

Portions © 1979-1984, Douglas Adams

Portions © 1983, Kent Pitman

Portions © 1992-1993, Peter Norvig and Kent Pitman

Portions © 2003-2020, Ericsson AB

Portions © 2008-2012, Robert Virding

Portions © 2010-2020, Steve Klabnik and Carol Nichols

Portions © 2013-2023, Robert Virding and Duncan McGreggor

This work is licensed under a Creative Commons Attribution-ShareAlike 4.0 International License

About the Cover

The LFE "Chinenual" cover is based upon the Lisp Machine Manual covers of the early 80s. The Lisp Machine Manual editions we have seen from 1977 and 1979 had only hand-typed title pages, no covers, so we're not sure if the famous graphic/typographic design occurred any earlier than 1982. We've also been unable to discover who the original designer was, but would love to give them credit, should we find out.

The Original

The Software Preservation Group has this image on their site:

Bitsavers has a 3rd edition of the Chinenual with the full cover

The LFE Edition

Whole Cover

Back Cover

The Spine

Dedication

To all LFE Community members, Lispers, programmers as well as all our friends and families.

Preface

The original Lisp Machine Manual, the direct spiritual ancestor of the LFE Machine Manual, described both the language and the "operating system" of the Lisp Machine. The language was a dialect of Lisp called Zetalisp. Zetalisp was a direct descendant of MACLISP, created as a systems programming language for the MIT Lisp machines. This is of special note since Erlang was created as a systems programming language too. One of its co-creators, Robert Virding, created Lisp Flavoured Erlang (LFE) based upon his experiences with Franz Lisp (which was based largely upon MACLISP), Portable Standard Lisp (itself an experiment in systems programming), and ultimately an implementation he made of Lisp Machine Flavors on top of VAX/VMS, for which he extensively used the Lisp Machine Manual.

As such, LFE has a very strong inheritance of systems programming from both parents, as it were. First and foremost, it is a BEAM language written on top of the Erlang VM and from which it strays very little. Secondly, it is a Lisp dialect. It is, however, entirely a systems programming language.

Which brings us back to Zetalisp and the Lisp Machine Manual. It seemed only fitting to base the LFE manual upon the fantastic work and documentation that was done on Lisp systems programming in the 70s and 80s, work that so many of us treasure and adore and to which we still defer. Thus the machine that is OTP, in the context and syntax of the LFE Lisp dialect, is extensively documented in the LFE MACHINE MANUAL.

Foreword

The original Lisp programming language was implemented nearly 65 years ago¹ at MIT (Massachusetts Institute of Technology), as part of the work done by John McCarthy and Marvin Minsky at the then-nascent AI (Artificial Intelligence) Lab. In the intervening decades, the original Lisp evolved and experienced significant transformation in a technological diaspora originally fuelled by an explosion of research in the field of artificial intelligence. Through this, the industry witnessed 40 years of development in which countless independent Lisp implementations were created. A small sampling of these includes such favourites as Lisp 1.5, MacLisp, Zetalisp, Scheme, Common Lisp, and ISLISP. However, the early 1990s saw the beginning of what would become the AI winter, and Lisp declined into obscurity – even notoriety – as graduating computer scientists were ushered into the “enterprise” world of Java, never to look back.

Except some did. By the early to mid-2000s, groundwork was being laid for what is now being recognized as a “Lisp renaissance.” The rediscovery of Lisp in the new millennium has led to the creation of a whole new collection of dialects, many implemented on top of other languages: Clojure on Java; LFE (Lisp Flavoured Erlang) and Joxa on Erlang; Hy on Python; Gherkin on Bash. New languages such as Go and Rust have several Lisp implementations, while JavaScript seems to gain a new Lisp every few years. While all of these ultimately owe their existence to the first Lisp, conceived in 1956² and defined in 1958³, they represent a new breed and a new techno-ecological niche for Lisp: bringing the power of meta-programming and the clarity of near syntaxlessness⁴ to established language platforms. Whereas in the past Lisp had been an either-or choice, the new era of Lisps represents a symbiotic relationship between Lisp and the language platforms or VMs (virtual machines) upon which they are implemented; you can now have both, without leaving behind the accumulated experiences and comfort of your preferred platform.

Just as the Lisp implementations of the 1960s were greatly impacted by the advent of time-sharing computer systems, the new Lisps mentioned above like Clojure and LFE have been deeply influenced not only by their underlying virtual machines, but – more importantly – by the world view which the creators and maintainers of those VMs espoused. For the Erlang ecosystem of BEAM (Bogdan/Björn's Erlang Abstract Machine) languages, the dominant world view is the primacy of highly-concurrent, fault-tolerant, soft real-time, distributed systems. Erlang was created with these requirements in mind, and LFE inherits this in full. As such, LFE is more than a new Lisp; it is a language of power designed for creating robust services and systems. This point bears some discussion in order to properly prepare the intrepid programming language enthusiast who wishes to travel through the dimensions of LFE.

Unlike languages whose prototypical users were developers working in an interactive shell engaged in such tasks as solving math problems, Erlang's prototypical “user” was a telecommunications device in a world where downtime was simply unacceptable. As such, Erlang's requirements and constraints were very unusual when compared to most other programming languages of its generation.⁵ Erlang, and thus its constellation of dialects, was designed from the start to be a programming language for building distributed systems: one where applications created with it could survive network and systems catastrophes, where millions of processes could be sustained on a single machine, where tens and hundreds of thousands of simultaneous network connections could be supported, and where even a live production deployment could have its code updated without downtime. Such is the backdrop against which the Erlang side of the LFE story unfolds – not only in the teaching and learning of it, but in its day-to-day use, and over time, in the minds of its developers.

When bringing new developers up to speed, this perspective is often overlooked or set aside for later. This is often done intentionally, since one doesn't want to overwhelm or discourage a newcomer by throwing them into the “deep end” of distributed systems theory and the critical minutiae of reliability. However, if we ignore the strengths of LFE when teaching it, we do newcomers as well as ourselves a disservice that leads to much misunderstanding and frustration: “Why is LFE so different? You can do X so much more simply in language Y.” It should be stated quite clearly in all introductory materials that the BEAM languages are not like other programming languages; in many ways, the less you rely upon your previous experiences with C, Java, Python, Ruby, etc., the better off you will be.

When compared to mainstream programming languages, Erlang's development is akin to the divergent evolution of animals on a continent which has been completely isolated for hundreds of millions of years. For instance, programming languages have their “Hello, world”s and their “first project”s. These are like lapdogs for newcomers to the programming world, a distant and domesticated version of their far more powerful ancestors. Though each language has its own species of puppy to help new users, they are all loyal and faithful companions which share a high percentage of common genetic history: this is how you print a line, this is how you create a new project. Erlang – the Down Under of programming languages – has its “Hello, world”s and “first project”s, too. But in this case, the lapdog does not count the wolf in its ancestral line. It's not even a canid.⁶ It's a thylacine⁷ with terrifying jaws and unfamiliar behaviours. Its “hello world” is sending messages to thousands of distributed peers and to nodes in supervised, monitored hierarchies. It has just enough familiarity to leave one feeling mystified by the differences and with the understanding that one is in the presence of something mostly alien.

That is the proper context for learning Erlang when coming from another programming language.

In LFE, we take that a step further by adding Lisp to the mix, supplementing a distributed programming language platform with the innovation laboratory that is Lisp. Far from making the learning process more difficult, this algebraic, time-honoured syntax provides an anchoring point, a home base for future exploration: it is pervasive and familiar, with very few syntactical rules to remember. We have even had reports of developers more easily learning Erlang via LFE.

In summary, by the end of this book we hope to have opened the reader's eyes to a new world of promise: distributed systems programming with a distinctly 1950s flavour – and a capacity to create applications that will thrive for another 50 years in our continually growing, decentralized technological world.

Duncan McGreggor

2015, Lakefield, MN & 2023, Sleepy Eye, MN

Notes

1. The first draft of this foreword, written in 2015, said "almost 60 years ago" but was never published. Today, at the end of 2023, this content is finally seeing the light of day, with the origins of Lisp receding further into the past ...

2. See McCarthy's 1979 paper History of Lisp, in particular the section entitled “LISP prehistory - Summer 1956 through Summer 1958”.

3. Ibid., section “The implementation of LISP”.

4. As you learn and then take advantage of Lisp's power, you will find yourself regularly creating new favourite features that LFE lacks. The author has gotten so used to this capability that he has applied this freedom to other areas of life. He hopes that you can forgive the occasional English language hack.

5. In fact, in the 1980s when Erlang was born, these features were completely unheard of in mainstream languages. Even today, the combination of features Erlang/OTP (Open Telecom Platform) provides is rare; an argument can be made that Erlang (including its dialects) is still the only language which provides them all.

6. The family of mammals that includes dogs, wolves, foxes, and jackals, among others.

7. An extinct apex predator and marsupial also known as the Tasmanian tiger or Tasmanian wolf.

Acknowledgments

Thank you Robert Virding for all that you have done to make Lisp Flavoured Erlang a reality.

Part I - Getting Started

Every programming journey begins with a single step, and in the Lisp tradition, that step is often taken at the REPL – the Read-Eval-Print Loop that transforms programming from a batch process into an interactive conversation with the computer. Part I of this manual guides you through your first steps in LFE (Lisp Flavoured Erlang), from understanding what makes this language unique to building your first complete application.

LFE occupies a fascinating position in the programming language landscape. It brings the elegant syntax and powerful abstractions of Lisp to the robust, fault-tolerant world of the Erlang Virtual Machine (BEAM). This combination offers something remarkable: a language that supports both the reflective, code-as-data philosophy of Lisp and the "let it crash" resilience of actor-model concurrency. Whether you're drawn to LFE from the Lisp side or the Erlang side, you'll discover that this synthesis creates new possibilities for building distributed, fault-tolerant systems.

We begin with the traditional "Hello, World!" programs, but in three distinct flavors that showcase LFE's versatility. The REPL version demonstrates immediate interactivity—type an expression, see the result instantly. The main function approach shows how to create standalone programs, while the LFE/OTP version introduces you to the powerful supervision trees and process management that make Erlang applications famously reliable.

The heart of Part I is the guessing game walk-through, a complete project that evolves from simple procedural code to a proper (if very simple) OTP application. This progression mirrors the journey many LFE programs take: starting as interactive explorations at the REPL, growing into functions and modules, and ultimately becoming supervised processes that can handle failures gracefully and scale across multiple machines.

Finally, we dive deep into the REPL itself—not just as a place to test code fragments, but as a powerful development environment. The REPL is where LFE's dual nature as both Lisp and Erlang becomes most apparent. You can manipulate code as data using traditional Lisp techniques, while simultaneously spawning processes, sending messages, and exploring the vast ecosystem of Erlang and OTP libraries.

By the end of Part I, you'll have not just written your first LFE programs, but developed an intuition for the LFE way of thinking—interactive, incremental, and designed for systems that never stop running. You'll understand how to leverage both the immediate feedback of Lisp development and the industrial-strength reliability of the BEAM platform, setting the foundation for the deeper explorations that follow in the subsequent parts of this manual.

Introduction

Far out in the uncharted backwaters of the unfashionable end of computer science known as "distributed systems programming" lies a small red e. Orbiting this at a distance roughly proportional to the inverse of the likelihood of it being noticed is an utterly insignificant little green mug filled with the morning beverage stimulant equivalent of That Old Janx Spirit. Upon that liquid floats a little yellow 𝛌 whose adherents are so amazingly primitive that they still think cons, car, and cdr are pretty neat ideas.

This is their book.

Their language, Lisp Flavoured Erlang (henceforth "LFE"), lets you use the archaic and much-beloved S-expressions to write some of the most advanced software on the planet. LFE is a general-purpose, concurrent, functional Lisp whose underlying virtual machine (Erlang) was designed to create distributed, fault-tolerant, soft-realtime, highly-available, always-up, hot-swappable appliances, applications, and services. In addition to fashionable digital watches, LFE sports immutable data, pattern-matching, eager evaluation, and dynamic typing.

This manual will not only teach you what all of that means and why you want it in your breakfast cereal, but also: how to create LFE programs; what exactly are the basic elements of the language; the ins-and-outs of extremely efficient and beautiful clients and servers; and much, much more.

Note, however, that the first chapter is a little different from those of most other books, and is in fact different from the rest of the chapters in this manual. We wrote this chapter with two guiding thoughts: first and foremost, we wanted to provide some practical examples of code-in-action as a context to which a programmer new to LFE could continuously refer -- from the very beginning through to the successful end -- while learning the general principles of the language; secondly, most programming language manuals are dry references to individual pieces of a language or system, not representatives of the whole, and we wanted to provide a starting place for those who learn well via examples, who would benefit from a view toward that whole. For those who have already seen plenty of LFE examples and would rather get down to the nitty-gritty, rest assured we desire your experience to be guilt-free and thus encourage you to jump into the next chapter immediately!

This book is divided into 6 parts with the following foci:

- Introductory material

- Core data types and capabilities

- The basics of LFE code and projects

- Advanced language features

- The machine that is OTP

- Concluding thoughts and summaries

There is a lot of material in this book, so just take it section by section. If at any time you feel overwhelmed, simply set down the book, take a deep breath, fix yourself a cuppa, and don't panic.

Welcome to the LFE MACHINE MANUAL, the definitive LFE reference.

About LFE

LFE is a modern programming language of two lineages, as indicated by the expansion of its acronym: Lisp Flavoured Erlang. In this book we aim to provide the reader with a comprehensive reference for LFE and will therefore explore both parental lines. Though the two language branches which ultimately merged in LFE are separated by nearly 30 years, and while LFE was created another 20 years after that, our story of their origins reveals that age simply doesn't matter. More significantly, LFE has unified Lisp and Erlang with a grace that is both simple and natural. This chapter is a historical overview of how Lisp, Erlang, and ultimately LFE came to be, thus providing a broad context for a complete learning experience.

What Is LFE?

LFE is a Lisp dialect which is heavily flavoured by the programming language virtual machine upon which it rests, the Erlang VM.¹ Lisps are members of a programming language family whose typical distinguishing visual characteristic is the heavy use of parentheses and what is called prefix notation.² To give you a visual sense of the LFE language, here is some example code:

(defun remsp
  (('())
   '())
  (((cons #\ tail))
   (remsp tail))
  (((cons head tail))
   (cons head (remsp tail))))

This function removes spaces from a string that is passed to it. We will postpone explanation and analysis of this code, but in a few chapters you will have the knowledge necessary to understand this bit of LFE.

Besides the parentheses and prefix notation, other substantial features which LFE shares with Lisp languages include the interchangeability of data with code and the ability to write code which generates new code using the same syntax as the rest of the language. Examples of other Lisps include Common Lisp, Scheme, and Clojure.

Erlang, on the other hand, is a language inspired most significantly by Prolog and whose virtual machine supports not only Erlang and LFE, but also newer BEAM languages including Joxa, Elixir, and Erlog. BEAM languages tend to focus on the key characteristics of their shared VM: fault-tolerance, massive scalability, and the ability to easily build soft real-time systems.

One way of describing LFE is as a programming language which unites these two. LFE is a happy mix of the serious, fault-tolerant philosophy of Erlang combined with the flexibility and extensibility offered by Lisp dialects. It is a homoiconic distributed systems programming language with Lisp macros which you will soon come to know in much greater detail.

A Brief History

To more fully understand the nature of LFE, we need to know more about Lisp and Erlang – how they came to be and, even more importantly, how they are used today. To do this we will cast our view back in time: first we'll look at Lisp, then we'll review the circumstances of Erlang's genesis, and finally we'll conclude the section with LFE's beginnings.

The Origins of Lisp

Lisp, originally spelled LISP, is an acronym for "LISt Processor". The language's creator, John McCarthy, was inspired by the work of his colleagues who in 1956 created IPL (Information Processing Language), an assembly programming language based upon the idea of manipulating lists. Though initially intrigued with their creation, McCarthy's interests in artificial intelligence required a higher-level language than IPL with a more general application of its list-manipulating features. So after much experimentation with FORTRAN, IPL, and heavy inspiration from Alonzo Church's lambda calculus,³ McCarthy created the first version of Lisp in 1958.

In the 1950s and 1960s programming languages were actually created on paper, due to limited computational resources. Volunteers, grad students, and even the children of language creators were used to simulate registers and operations of the language. This is how the first version of Lisp was “run”.⁴ Furthermore, there were two kinds of Lisp: students in the AI lab wrote a form called S-expressions, which was eventually input into an actual computer. These instructions had the form of nested lists, the syntax that eventually became synonymous with Lisp. The other form, called M-expressions, was used when McCarthy gave lectures or presented papers.⁵ These had a syntax which more closely resembled what programmers expected. This separation was natural at the time: for over a decade programmers had entered instructions using binary or machine language while describing these efforts in papers using natural language or pseudocode. McCarthy's students programmed entirely in S-expressions and as their use grew in popularity, the fate of M-expressions was sealed: they were never implemented.⁶

The Lisp 1.5 programmer's manual, first published in 1962, used M-expressions extensively to introduce and explain the language. Here is an example function⁷, defined using M-expressions, for removing spaces from an input string:

remsp[string] = [null[string]→F;
                 eq[car[string];" "]→remsp[cdr[string]];
                 T→cons[car[string];remsp[cdr[string]]]]

The corresponding S-expression is what the Lisp programmer would actually enter into the IBM 704 machine that was used by the AI lab at MIT:⁸

DEFINE ((
  (REMSP (LAMBDA (STRING)
    (COND ((NULL STRING)
           F)
          ((EQ (CAR STRING) " ")
           (REMSP (CDR STRING)))
          (T
           (CONS (CAR STRING)
                 (REMSP (CDR STRING)))))))))

The period from 1958 to 1962, when Lisp 1.5 was released, marked the beginning of a new era in computer science. Since then Lisp dialects have made an extraordinary impact on the design and theory of other programming languages, changing the face of computing history perhaps more than any other language group. Language features that Lisp pioneered include such significant examples as homoiconicity, conditional expressions, recursion, meta-programming, meta-circular evaluation, automatic garbage collection, and first-class functions. A classic synopsis of these accomplishments was given by the renowned computer scientist Edsger Dijkstra in his 1972 Turing Award lecture, where he said the following about Lisp:

“With a few very basic principles at its foundation, [Lisp] has shown a remarkable stability. Besides that, Lisp has been the carrier for a considerable number of, in a sense, our most sophisticated computer applications. Lisp has jokingly been described as ‘the most intelligent way to misuse a computer’. I think that description a great compliment because it transmits the full flavour of liberation: it has assisted a number of our most gifted fellow humans in thinking previously impossible thoughts.”

Lisp usage is generally described as peaking in the 80s and early 90s, experiencing an adoption setback with the widespread view that problems in artificial intelligence were far more difficult to solve than originally anticipated.⁹ Another problem facing Lisp was related to hardware requirements: specialized architectures were developed in order to provide sufficient computational power to its users. These machines were expensive, had few vendors, and had a slow product cycle.

In the midst of this Lisp cold-spell, two seminal Lisp books were published: On Lisp and ANSI Common Lisp, both by famed entrepreneur Paul Graham.¹⁰ Despite a decade of decline, these events helped catalyse a new appreciation for the language by a younger generation of programmers and within a few short years, the number of Lisp books and Lisp-based languages began growing, giving the world the likes of Practical Common Lisp and Let Over Lambda in the case of the former, and Clojure and LFE, in the case of the latter.

Constructing Erlang

Erlang was born in the heart of Ericsson's Computer Science Laboratory,¹¹ just outside of Stockholm, Sweden.¹² The lab had the general aim “to make Ericsson software activities as efficient as possible through the purposeful exploitation of modern technology.” The Erlang programming language was the lab's crowning achievement, but the effort leading up to this was extensive, with a great many people engaged in the creation of many prototypes and the use of numerous programming languages.

One example of this is the work that Nabiel Elshiewy and Robert Virding did in 1986 with Parlog, a concurrent logic programming language based on Prolog. Though this work was eventually abandoned, you can read the paper The Phoning Philosopher's Problem or Logic Programming for Telecommunications Applications and see the impact its features had on the future development of Erlang. The paper provides some examples of Parlog usage; using those for inspiration, we can envision what our space-removing program would look like:¹³

remsp([]) :-
    [].
remsp([$ |Tail]) :-
    remsp(Tail).
remsp([Head|Tail]) :-
    [Head|remsp(Tail)].

Another language that was part of this department-wide experimentation was Smalltalk. Joe Armstrong started experimenting with it in 1985 to model telephone exchanges and used this work to develop a telephony algebra. A year later, when his colleague Roger Skagervall showed him the equivalence between this and logic programming, Prolog began its rise to prominence, and the first steps were made towards the syntax of Erlang as the world now knows it. In modern Erlang, our program has the following form:

remsp([]) ->
    [];
remsp([$ |Tail]) ->
    remsp(Tail);
remsp([Head|Tail]) ->
    [Head|remsp(Tail)].

The members of the Ericsson lab who were tasked with building the next generation telephone exchange system, and thus involved with the various language experiments made over the course of a few years, came to the following conclusions:

- Small languages seemed better at succinctly addressing the problem space.

- The functional programming paradigm was appreciated, if sometimes viewed as awkward.

- Logic programming provided the most elegant solutions in the given problem space.

- Support for concurrency was viewed as essential.

If these were the initial guideposts for Erlang development, its guiding principles were the following:

- To handle high-concurrency

- To handle soft real-time constraints

- To support non-local, distributed computing

- To enable hardware interaction

- To support very large scale software systems

- To support complex interactions

- To provide non-stop operation

- To allow for system updates without downtime

- To allow engineers to create systems with only seconds of down-time per year

- To easily adapt to faults in both hardware and software

These were accomplished using such features as immutable data structures, lightweight processes, no shared memory, message passing, supervision trees, and heartbeats. Furthermore, having adopted message passing as the means of providing high concurrency, Erlang slowly evolved into the exemplar of a programming paradigm that it essentially invented and which, even today, it dominates: concurrent functional programming.

After four years of development from the late 80s into the early 90s, Erlang matured to the point where it was adopted for large projects inside Ericsson. In 1998 it was released as open source software, and has since seen growing adoption in the wider world of network- and systems-oriented programming.

The Birth of LFE

One of the co-inventors of Erlang, and a participant in the Lab's early efforts in language experimentation, was Robert Virding. Virding first encountered Lisp in 1980 when he started his PhD in theoretical physics at Stockholm University. His exposure to the language came as a result of the physics department's use of it for performing symbolic algebraic computations. Despite this, he spent more time working on micro-processor programming and didn't dive into it until a few years later when he was working at Ericsson's Computer Science Laboratory. One of the languages evaluated for use in building telephony software was Lisp, but to do so properly required getting to know it in depth – both at the language level and at the operating system level.¹⁴ It was in this work that Virding's passion for Lisp blossomed and he came to appreciate deeply its functional nature, macros, and homoiconicity – all excellent and time-saving tools for building complicated systems.

Though the work on Lisps did not become the focus of Erlang development, the seeds of LFE were planted even before Erlang itself had come to be. After 20 years of contributions to the Erlang programming language, these began to bear fruit. In 2007 Virding decided to do something fun in his down time: to see what a Lisp would look like if written on top of the Prolog-inspired Erlang VM. After several months of hacking, he announced a first version of LFE to the Erlang mailing list in early 2008.

A few years later, when asked about the origins of LFE and the motivating elements behind his decision to start the project, Virding shared the following on the LFE mailing list:

- It had always been a goal of Robert's to make a Lisp which could fully interact with Erlang/OTP, to see what it would look like and how it would run.

- He was looking for some interesting programming projects that were not too large to do in his spare time.

- He thought it would be a fun, open-ended problem to solve with many interesting parts.

- He likes implementing languages.

We showed an example of LFE at the beginning of this chapter; in keeping with our theme for each language subsection, we present it here again, though in a slightly altered form:

(defun remsp
  (('())
   '())
  ((`(32 . ,tail))
   (remsp tail))
  ((`(,head . ,tail))
   (cons head (remsp tail))))

What is LFE Good For?

Very few languages have the powerful capabilities which Erlang offers – both in its standard library as well as the set of Erlang libraries, frameworks, and patterns that are provided in OTP. This covers everything from fault-tolerance, scalability, soft real time capacity, and high-availability to proper design, component assembly, and deployment in distributed environments.

Similarly, despite the impact that Lisp has had on so many programming languages, its full suite of features is still essentially limited to Lisp dialects. This includes the features we have already mentioned: the ability to treat code as data, easily generate new code from data, as well as the interrelated power of writing macros – the last allows developers to modify the language to suit their needs. These rare features from two different language branches are unified in LFE, and no other well-established language provides the union of both.

As such, LFE gives developers everything they need to envision, prototype, and then build distributed applications – ones with unique requirements that no platform provides and which can be delivered thanks to LFE's language-building capabilities.

To paraphrase and augment the opening of Chapter 1 in Designing for Scalability with Erlang/OTP:

“You need to implement a fault tolerant, scalable soft real time system with requirements for high availability. It has to be event driven and react to external stimulus, load and failure. It must always be responsive. You also need language-level features that don't exist yet. You would like to encode your domain's best practices and design patterns seamlessly into your chosen platform.”

LFE has everything you need to realize this dream ... and so much more.

In Summary

What LFE Is

Here's what you can expect of LFE:

- A proper Lisp-2, based on the features and limitations of the Erlang VM

- Compatibility with vanilla Erlang and OTP

- It runs on the standard Erlang VM

Furthermore, as a result of Erlang's influence (and LFE's compatibility with it), the following hold:

- there is no global data

- data is not mutable

- only the standard Erlang data types are used

- you get pattern matching and guards

- you have access to Erlang functions and modules

- LFE has a compiler/interpreter

- functions with declared arity and fixed number of arguments

- Lisp macros

What LFE Isn't

Just to clear the air and set some expectations, we'll go a step further. Here's what you're not going to find in LFE:

- An implementation of Scheme

- An implementation of Common Lisp

- An implementation of Clojure

As such, you will not find the following:

- A Scheme-like single namespace

- CL packages or munged names faking packages

- Access to Java libraries

Notes

-

Robert Virding, the creator of LFE and one of the co-creators of the Erlang programming language, has previously stated that, were he to start again, he would name his Lisp dialect EFL, since it truly is a Lisp with an Erlang flavour, rather than the other way round. ↩

-

We will be covering prefix notation when we cover symbolic expressions later in the book. ↩

-

Alonzo Church was one of McCarthy's professors at Princeton. McCarthy did not use all of the lambda calculus when creating Lisp, as there were many esoteric aspects for which he had no practical need. ↩

-

In the case of Lisp, university students were the primary computer hardware ... and sometimes even high school students (see REPL footnote below). ↩

-

This approach was not uncommon at the time: the ALGOL 58 specification defined a syntax for the language reference, one for publications, and a third for implementation. ↩

-

The single greatest contributor to the ascendance of the S-expression is probably the invention of the REPL by L Peter Deutsch, which allowed for interactive Lisp programming. This was almost trivial in S-expressions, whereas a great deal of effort would have been required to support a similar functionality for M-expressions. ↩

-

The function we use in this chapter to demonstrate various syntaxes and dialects was copied from the cover of Byte Magazine's August 1979 issue which focused on Lisp and had part of a Lisp 1.5 program on its cover. ↩

-

The formatting applied to the S-expression version of the function is a modern convention, added here for improved readability. There was originally no formatting, since there was no display – a keypunch was used to enter text on punchcards, 80 characters at a time. As such, a more historically accurate representation would perhaps be:

DEFINE (((REMSP (LAMBDA (STRING) (COND ((NULL STRING) F) ((EQ (CAR STRING) " ") (REMSP (CDR STRING))) (T (CONS (CAR STRING) (REMSP (CDR STRING))))))))) ↩

-

This time period is commonly referred to as the “AI winter”. ↩

-

Paul Graham sold his Lisp-based e-commerce startup to Yahoo! in 1998. ↩

-

The majority of this section's content was adapted from Joe Armstrong's paper “A History of Erlang”, written for the HOPL III conference in 2007. ↩

-

The Computer Science Laboratory operated from 1982 to 2002 in Älvsjö, Stockholm. ↩

-

We've taken the liberty of envisioning the Parlog of 1986 as one that supported pattern matching on characters. ↩

-

One of Virding's project aims was to gain a deeper understanding of Lisp internals. As part of this, he ported the Lisp Machine Lisp object framework Flavors to Portable Standard Lisp running on UNIX. His work on this project contributed to his decision to use Flavour as part of the name for LFE (spelling divergence intentional). ↩

Prerequisites

Anyone coming to LFE should have experience programming in another language, ideally a systems programming language, especially if that language was found lacking. If the courageous reader is attempting to use LFE as a means of entering the study of computer science, we might offer several other paths of study which may bear fruit more quickly and with less pain.

No prior Lisp experience is required, but it would certainly be helpful. The same goes for Erlang/OTP (or any of the suite of BEAM languages). The reader with experience writing concurrent applications, wrestling with fault-tolerance, or maintaining highly-available applications and services does receive bonus points for preparedness. Such well-prepared readers may, in fact, have landed here on a quest for a distributed Lisp. For those to whom this applies, your quest has found its happy end.

This book assumes the reader has the following installed upon their system:

- a package manager for easily installing software (in particular, development tools and supporting libraries)

- git, make, and other core open source software development tools

- a modern version of Erlang (as of the writing of this book, that would include versions 19 through 23); the rebar3 documentation has great suggestions on what to use here, depending upon your needs

- the rebar3 build tool for Erlang (and other BEAM languages); see its docs for installation instructions

Conventions

Typography

Key Entry

We use the angle bracket convention to indicate typing an actual key on the keyboard. For instance, when the reader sees <ENTER> they should interpret this as an actual key they should type. Note that all keys are given in upper-case. If the reader is expected to use an upper-case "C" instead of a lower-case "c", they will be presented with the key combination <SHIFT><C>.

Code

Color syntax highlighting is used in this text to display blocks of code. The formatting of this display is done in such a way as to evoke in the mind of the reader the feeling of a terminal, thus making an obvious visual distinction in the text. For instance:

(defun fib
  ((0) 0)
  ((1) 1)
  ((n)
   (+ (fib (- n 1))
      (fib (- n 2)))))

Examples such as this one are readily copied and may be pasted without edit into a file or even the LFE REPL itself.

For interactive code, we display the default LFE prompt the reader will see when in the REPL:

lfe> (integer_to_list 42 2)

;; "101010"

We also distinguish the output from the entered LFE code by displaying it as code comments after the command.

Shell commands are prefixed by a $, which stands for the shell prompt and is not meant to be typed. Any relevant output is provided as a comment:

$ echo "I am excited to learn LFE"

# I am excited to learn LFE

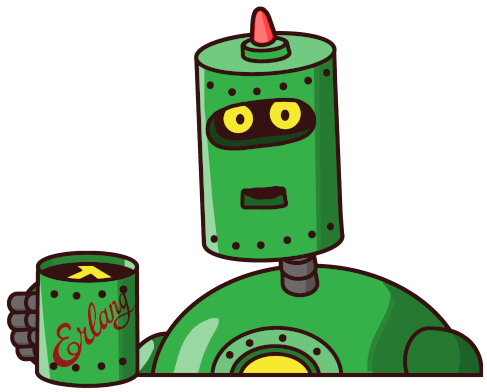

LiffyBot

This is a severely hoopy frood. With an attitude. He pops up from time to time, generally with good advice. Or simply as a marker for something the authors hope you will take special note of.

Messages of Note

From time to time you will see call-out boxes, aimed at drawing your attention to something of note. There are four different types of these:

- ones that share useful info (blue)

- ones that highlight something of a momentous nature (green)

- ones that offer warnings to tread carefully (orange)

- ones that beg you not to follow a particular path (red)

These messages will take the following forms:

Information

Here you will see a message of general interest that could have a useful or even positive impact on your experience in programming LFE.

The icon associated with this type of message is the "i" in a circle.

Amazing!

Here you will see a message of general celebration for situations that warrant it, above and beyond the general celebration you will feel writing programs in a distributed Lisp.

The icon associated with this type of message is that of LiffyBot.

Warning!

Here you will see a message indicating a known issue or practice you should avoid if possible.

The icon associated with this type of message is the "!" in a caution triangle.

Danger!

Here you will see a message indicating something that could endanger the proper function of an LFE system or threaten the very existence of the universe itself.

The icon associated with this type of message is "do not enter".

Development Setup

rebar3 Configuration

Having followed the notes and linked instructions in the Prerequisites section, you are ready to add global support for the LFE rebar3 plugin.

First, unless you have configured other rebar3 plugins on your system, you will need to create the configuration directory and the configuration file:

$ mkdir -p ~/.config/rebar3

$ touch ~/.config/rebar3/rebar.config

Next, open up that file in your favourite editor, and give it these contents:

{plugins, [

{rebar3_lfe, "0.4.8"}

]}.

If you already have a rebar.config file with a plugins entry, then simply add a comma after the last plugin listed and paste the {rebar3_lfe, ...} line from above (with no trailing comma!). When a new version of rebar3_lfe is released, you can follow the instructions in the rebar3_lfe repo to upgrade.

For Windows users

Some notes on compatibility:

While LFE, Erlang, and rebar3 work on *NIX, BSD, and Windows systems, much of the community's development occurs predominantly on the first two, and Windows support is sometimes spotty and less smooth than on the more commonly used platforms (this is more true for rebar3 and LFE than for Erlang, and most true for LFE).

In particular, starting a REPL in Windows can take a little more effort (an extra step or two) than it does on, for example, Linux and Mac OS X machines.

A Quick Test with the REPL

With the LFE rebar3 plugin successfully configured, you should be able to start up the LFE REPL anywhere on your system with the following:

$ rebar3 lfe repl

Erlang/OTP 23 [erts-11.0] [source] [64-bit] [smp:16:16] [ds:16:16:10] [async-threads:1] [hipe]

   ..-~.~_~---..
  (      \\     )    |   A Lisp-2+ on the Erlang VM
  |`-.._/_\\_.-':    |   Type (help) for usage info.
  |         g |_ \   |
  |        n    | |  |   Docs: http://docs.lfe.io/
  |       a    / /   |   Source: http://github.com/rvirding/lfe
   \     l    |_/    |
    \   r     /      |   LFE v1.3-dev (abort with ^G)
     `-E___.-'

lfe>

Exit out of the REPL for now by typing <CTRL><G> and then <Q>.

For Windows users

On Windows, this currently puts you into the Erlang shell, not the LFE REPL. To continue to the LFE REPL, you will need to enter lfe_shell:server(). and then press <ENTER>.

'Hello, World!'

Hello-World style introductory programs are intended to give the prospective programmer for that language a sense of what it is like to write a minimalist piece of software with the language in question. In particular, it should show off the minimum capabilities of the language. Practically, this type of program should signify to the curious coder what they could be in for, should they decide upon this particular path.

In the case of LFE/OTP, a standard Hello-World program (essentially a "print" statement) is extremely misleading; more on that in the OTP version of the Hello-World program. Regardless, we concede to conventional practice and produce a minimal Hello-World that does what many other languages' Hello-World programs do. We do, however, go further afterwards ...

From the REPL

As previously demonstrated, it is possible to start up the LFE 'read-eval-print loop' (REPL) using rebar3:

$ rebar3 lfe repl

Once you are at the LFE prompt, you may write a simple LFE "program" like the following:

lfe> (io:format "~p~n" (list "Hello, World!"))

Or, for the terminally lazy:

lfe> (io:format "~p~n" '("Hello, World!"))

While technically a program, it is not a very interesting one: we didn't create a function of our own, nor did we run it from outside the LFE interactive programming environment.

Let's address one of those points right now. Try this:

lfe> (defun hello-world ()

lfe> (io:format "~p~n" '("Hello, World!")))

This is a simple function definition in LFE.

We can run it by calling it:

lfe> (hello-world)

;; "Hello, World!"

;; ok

When we execute our hello-world function, it prints our message to standard output and then lets us know everything is quite fine with a friendly ok.

Note

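The ok is not printed by hello-world itself: it is the return value of io:format, the last expression in the function body. An LFE function always returns the value of its last expression, and the REPL displays whatever value it gets back.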
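To see where that ok comes from, you can bind the result of calling hello-world to a variable and print it yourself. This is a small sketch meant to be pasted into the REPL; the variable name result is arbitrary.

```lfe
;; hello-world returns the value of its last expression, the call to
;; io:format, which prints as a side effect and returns the atom ok.
(defun hello-world ()
  (io:format "~p~n" '("Hello, World!")))

;; Bind the return value to show that it is the ok from io:format.
(let ((result (hello-world)))
  (io:format "hello-world returned: ~p~n" (list result)))
```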

Now let's address the other point: running a Hello-World program from outside LFE.

Hit <CTRL><G> and then <Q> to exit the REPL and get back to your terminal.

From the Command Line

From your system shell prompt, run the following to create a new project that will let us run a Hello-World program from the command line:

$ rebar3 new lfe-main hello-world

$ cd ./hello-world

Once in the project directory, you can actually just do this:

$ rebar3 lfe run

You will see code getting downloaded and compiled, and then your script will run, generating the following output:

Running script '/usr/local/bin/rebar3' with args [] ...

'hello-world'

When you created a new LFE project of type 'main', a Hello-World function was automatically generated for you, one that's even simpler than what we created in the previous section:

(defun my-fun ()

'hello-world)

The other code that was created when we executed rebar3 new lfe-main hello-world was a script meant to be used by LFE with LFE acting as a shell interpreter:

#!/usr/bin/env lfescript

(defun main (args)
  (let ((script-name (escript:script_name)))
    (io:format "Running script '~s' with args ~p ...~n" `(,script-name ,args))
    (io:format "~p~n" `(,(hello-world:my-fun)))))

You may be wondering about the args argument to the main function, and the fact that the printed output for the args when we ran this was []. Let's try something:

$ rebar3 lfe run -- Fenchurch 42

Running script '/usr/local/bin/rebar3' with args [<<"Fenchurch">>,<<"42">>] ...

'hello-world'

We have to provide the two dashes to let rebar3 know that we're done with it, and that the subsequent arguments are not for it but for the program we want it to start for us. Everything after the -- is passed as arguments to our script.

As for the code itself, it's tiny. But there is a lot going on just with these two files. Have no fear, though: the remainder of this book will explore all of that and more. For now, know that the main function in the executable is calling the hello-world module's my-fun function, which takes no arguments. To put it another way, what we really have here is a tiny, trivial library project with the addition of a script that calls a function from that library.

For now just know that an executable file which starts with #!/usr/bin/env lfescript and contains a main function accepting one argument is an LFE script capable of being executed from the command line -- as we have shown!

LFE/OTP 'Hello, World!'

What we have demonstrated so far are fairly vanilla Hello-World examples; there's nothing particularly interesting about them, which puts them solidly in the company of the millions of other Hello-World programs. As mentioned before, this approach is particularly vexing in the case of LFE/OTP, since it lures the prospective developer into the preconception that BEAM languages are just like other programming languages. They most decidedly are not.

What makes them, and in this particular case LFE, special is OTP. There's nothing quite like it, certainly not another language with OTP's feature set baked into its heart and soul. Most useful applications you will write in LFE/OTP will be composed of some sort of long-running service or server, something that manages that server and restarts it in the event of errors, and lastly, a context that contains both -- usually referred to as the "application" itself.

As such, a real Hello-World in LFE would be honest and let the prospective developer know what they are in for (and what power will be placed at their fingertips). That is what we will show now, an LFE OTP Hello-World example.

If you are still in the directory of the previous Hello-World project, let's get out of that:

$ cd ../

Now we're going to create a new project, one utilising some very basic OTP patterns:

$ rebar3 new lfe-app hello-otp-world

$ cd ./hello-otp-world

We won't look at the code for this right now, since there are chapters dedicated to that in the second half of the book. But let's scratch the surface with a quick run in the REPL:

$ rebar3 lfe repl

To start your new hello-world application, use the OTP application module:

lfe> (application:ensure_all_started 'hello-otp-world)

;; #(ok (hello-otp-world))

That message lets you know that not only were the hello-otp-world application and server started without issue, but any applications upon which it depends were also started. Furthermore, there is a supervisor for our server, and it has started as well. Should our Hello-World server crash for any reason, the supervisor will restart it.

To finish the demonstration, and display the clichéd if classic message:

lfe> (hello-otp-world:echo "Hello, OTP World!")

;; "Hello, OTP World!"

And that, dear reader, is a true LFE/OTP Hello-World program, complete with message-passing and pattern-matching!

Feel free to poke around in the code that was generated for you, but know that eventually all its mysteries will be revealed, and by the end of this book, that program's magic will just seem like ordinary code to you: ordinary, dependable, fault-tolerant, highly-available, massively-concurrent code.

Walk-through: An LFE Guessing Game

Now that you've seen some LFE in action, let's do something completely insane: write a whole game before we even know the language!

We will follow the same patterns established in the Hello-World examples, so if you are still in one of the Hello-World projects, change directory and then create a new LFE project:

$ cd ../

$ rebar3 new lfe-app guessing-game

$ cd ./guessing-game

We will create this game by exploring functions in the REPL and then saving the results in a file. Open up your generated project in your favourite code-editing application, and then open up a terminal from your new project directory, and start the REPL:

$ rebar3 lfe repl

Planning the Game

We've created our new project, but before we write even a single atom of code, let's take a moment to think about the problem and come up with a nice solution. By doing this, we increase our chances of making something both useful and elegant. As long as what we write remains legible and meets our needs, the less we write the better. This sort of practical elegance makes code easier to maintain and reduces the chance of bugs, by the simple merit of there being less code in which a bug may arise.

Our first step will be making sure we understand the problem and devising some minimal abstractions. Next, we'll think about what actually needs to happen in the game. With that in hand, we will know what state we need to track. Then, we're off to the races: all the code will fall right into place and we'll get to play our game.

Key Abstractions

In a guessing game, there are two players: one who has the answer, and one who seeks the answer. Our code and data should clearly model these two players.

Actions

The player with the answer needs to perform the following actions:

- At the beginning of the game, state the problem and tell the other player to start guessing

- Receive the other player's guess

- Check the guess against the answer

- Report back to the other player on the provided guess

- End the game if the guess was correct

The guessing player needs to take only one action:

- guess!

State

We need to track the state of the game. Based upon the actions we've examined, the overall state is very simple: through the course of the game, we will only need to preserve the answer that is to be guessed.

Code Explore

Now that we've thought through our problem space clearly and cleanly, let's do some code exploration and start defining some functions we think we'll need.

We've already generated an OTP application using the LFE rebar3 plugin, and once we've got our collection of functions that address the needed game features, we can plug those into the application.

We'll make those changes in the code editor you've opened, and we'll explore a small set of possible functions to use for this using the project REPL session you've just started.

Getting User Input

How do we get user input in LFE? Like this!

lfe> (io:fread "Guess number: " "~d")

This will print the prompt Guess number: and then await your input and the press of the <ENTER> key. The input you provide needs to match the format type given in the second argument. In this case, the ~d tells us that this needs to be a decimal (base 10) integer.

lfe> (io:fread "Guess number: " "~d")

;; Guess number: 42

;; #(ok "*")
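The "*" in that result can be surprising. io:fread returns its parsed values in a list, here the one-element list holding 42, and the REPL displays any list of printable character codes as a string; 42 happens to be the character code for *. A quick check in the REPL shows that the two are the same term:

```lfe
;; LFE strings are lists of integer character codes, so the
;; one-element list holding 42 and the string "*" are the same term.
(=:= '(42) "*")
;; returns true

;; Likewise, the list of codes 72 and 105 is the string "Hi".
(=:= (list 72 105) "Hi")
;; returns true
```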

If we try typing something that is not a base 10 integer, we get an error:

lfe> (io:fread "Guess number: " "~d")

;; Guess number: forty-two

;; #(error #(fread integer))

With correct usage, how do we capture the value in a variable? The standard way to do this in LFE is destructuring via pattern matching. The following snippet extracts the value and then prints the extracted value in the REPL:

lfe> (let ((`#(ok (,value)) (io:fread "Guess number: " "~d")))

lfe> (io:format "Got: ~p~n" `(,value)))

;; Guess number: 42

;; Got: 42

;; ok

We'll talk a lot more about pattern matching in the future, as well as the meaning of the backtick and commas. For now, let's keep pottering in the REPL with these explorations, and make a function for this:

lfe> (defun guess ()

lfe> (let ((`#(ok (,value)) (io:fread "Guess number: " "~d")))

lfe> (io:format "You guessed: ~p~n" `(,value))))

And call it:

lfe> (guess)

;; Guess number: 42

;; You guessed: 42

;; ok
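Because the let pattern only matches the #(ok ...) shape, the guess function crashes with a badmatch error when the input is not an integer. One way to handle both outcomes is a case form. The sketch below uses the hypothetical names parse-guess and safe-guess, and splits the parsing into its own function so that it can be tried without typing any input:

```lfe
;; parse-guess pattern matches on the tuple returned by io:fread:
;; a successful read of one integer, or anything else (an error or eof).
(defun parse-guess
  ((`#(ok (,value))) `#(ok ,value))
  ((_) 'invalid))

;; safe-guess reads from standard input and reports either outcome
;; instead of crashing on input that is not a base 10 integer.
(defun safe-guess ()
  (case (parse-guess (io:fread "Guess number: " "~d"))
    (`#(ok ,value)
     (io:format "You guessed: ~p~n" `(,value)))
    ('invalid
     (io:format "Please enter a whole number.~n"))))
```

Calling (parse-guess '#(ok (42))) returns #(ok 42), while (parse-guess '#(error #(fread integer))) returns invalid.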

Checking the Input

In LFE there are several ways in which you can perform checks on values:

- the if form

- the cond form

- the case form

- pattern-matching and/or guards in function heads

The last one is commonly used in LFE when passing messages / data between functions. Our initial, generated project code is already doing this, and given the game state data we will be working with, this feels like a good fit for what we need to implement.
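For comparison with the guard-based approach, the same three-way check can be written with cond, which tries each test in order and evaluates the body of the first one that is true. This is a sketch under two assumptions: the function name compare-guess is hypothetical, and it takes the answer as an argument and returns an atom rather than printing, which makes it easy to try in the REPL.

```lfe
;; cond evaluates each test in turn; the first true test wins.
;; The final 'true test acts as the catch-all (here: guess == answer).
(defun compare-guess (guess answer)
  (cond ((< guess answer) 'too-low)
        ((> guess answer) 'too-high)
        ('true 'correct)))
```

For example, (compare-guess 10 42) returns too-low.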

Normally records are used for application data, but since we just care about the value of two integers (the number selected for the answer and the number guessed by the player), we'll keep things simple in this game:

lfe> (set answer 42)

Let's create a function with a guard:

lfe> (defun check

lfe> ((guess) (when (< guess answer))

lfe> (io:format "Guess is too low~n")))

The extra parentheses around the function's arguments are due to the pattern-matching form of function definition we're using here. We need this form, since we're going to use a guard: the when after the function arguments is called a "guard" in LFE. As you might imagine, we could use any number of these.

lfe> (check 10)

;; Guess is too low

;; ok

Let's add some more guards for the other checks we want to perform:

lfe> (defun check

lfe> ((guess) (when (< guess answer))

lfe> (io:format "Guess is too low~n"))

lfe> ((guess) (when (> guess answer))

lfe> (io:format "Guess is too high~n"))

lfe> ((guess) (when (== guess answer))

lfe> (io:format "Correct!~n")))

lfe> (check 10)

;; Guess is too low

;; ok

lfe> (check 100)

;; Guess is too high

;; ok

lfe> (check 42)

;; Correct!

;; ok

This should give a very general sense of what is possible.
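A single when can also hold more than one test, and all of them must succeed for the clause to match. As a sketch, the hypothetical check-int below also rejects non-integer guesses before comparing; like the cond example, it takes the answer as an argument, returns atoms instead of printing, and assumes the answer is an integer.

```lfe
;; Every test inside one when must be true for its clause to match.
(defun check-int
  ((guess answer) (when (is_integer guess) (< guess answer))
   'too-low)
  ((guess answer) (when (is_integer guess) (> guess answer))
   'too-high)
  ;; An integer that is neither lower nor higher must be equal.
  ((guess _answer) (when (is_integer guess))
   'correct)
  ;; Anything that is not an integer falls through to here.
  ((_guess _answer)
   'not-an-integer))
```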

Integrating into an Application

We're only going to touch one of the files that was generated when you created the guessing-game project: ./src/guessing-game.lfe. You can ignore all the others. Once we've made all the changes summarized below, we will walk through this file at a high level, discussing the changes and how those contribute to the completion of the game.

First though, we need to reflect on the planning we just did, remembering the actions and states that we want to support. There's also another thing to consider, since we're writing this as an always-up OTP app. With some adjustments for state management, it could easily be turned into something that literally millions of users could be accessing simultaneously. So: how does a game that is usually implemented as a quick CLI toy get transformed in LFE/OTP such that it can be run as a server?

In short, we'll use OTP's gen_server capability ("behaviour") and the usual message-passing practices. As such, the server will need to be able to process the following messages:

- #(start-game true) (choose an answer, which becomes the game state)

- #(stop-game true) (clear the game state)

- #(guess n), checking for a guess

  - equal to the answer,

  - greater than the answer, and

  - less than the answer

We could have just used atoms for the first two, and done away with the complexity of using tuples for those, but symmetry is nice :-)

To create the game, we're going to need to perform the following integration tasks:

- Update the handle_cast function to process the commands and guards we listed above

- Create API functions that cast the appropriate messages

- Update the export form in the module definition

- Set the random seed so that the answers are different every time you start the application

handle_cast

The biggest chunk of code that needs to be changed is the handle_cast function. Since our game doesn't return values, we'll be using handle_cast. (If we needed to have data or results returned to us in the REPL, we would have used handle_call instead. Note that both are standard OTP gen_server callback functions.)

The generated project barely populates this function, and the function isn't of the form that supports pattern-matching (which we need here), so we will essentially be replacing what was generated. In the file ./src/guessing-game.lfe, change this:

(defun handle_cast (_msg state)

`#(noreply ,state))

to this:

(defun handle_cast
  ((`#(start-game true) _state)
   (io:format "Guess the number I have chosen, between 1 and 10.~n")
   `#(noreply ,(random:uniform 10)))
  ((`#(stop-game true) _state)
   (io:format "Game over~n")
   '#(noreply undefined))
  ((`#(guess ,n) answer) (when (== n answer))
   (io:format "Well-guessed!!~n")
   (stop-game)
   '#(noreply undefined))
  ((`#(guess ,n) answer) (when (> n answer))
   (io:format "Your guess is too high.~n")
   `#(noreply ,answer))
  ((`#(guess ,n) answer) (when (< n answer))
   (io:format "Your guess is too low.~n")
   `#(noreply ,answer))
  ((_msg state)
   `#(noreply ,state)))

That is a single function in LFE, since the arity stays the same across every clause. It is, however, a function with six different and separate argument-body forms: one for each pattern and/or guard.

These patterns are matched:

- start

- stop

- guess (three times)

- any

The guess pattern appears three times (though it is really one pattern), since there are three different guards we want to place on it:

- guess is equal

- guess is greater

- guess is less

Note that the pattern for the function argument in these last three didn't change, only the guard is different between them.

Finally, there's the original "pass-through" or "match-any" pattern (this is used to prevent an error in the event of an unexpected message type).
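One consequence of these guards is worth knowing about. Whenever no game is in progress, the server state is not an integer (after stop-game, for example, it is the atom undefined). Erlang's term ordering places every number before every atom and every list, so a guess sent at such a time matches the "too low" clause. You can confirm the ordering in the REPL:

```lfe
;; Erlang term order sorts numbers before atoms and before lists,
;; so both comparisons return true.
(< 5 'undefined)
(< 5 '())
```

One way to avoid this would be to add (is_integer answer) to each of the three guess guards, so that such a guess falls through to the final match-any clause and is ignored.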

Game API

In order to send a message to a running OTP server, you use special OTP functions for the type of server you are running. Our game is running a gen_server so we'll be using that OTP module to send messages, in particular we'll be calling gen_server:cast. However, creating messages and sending them via the appropriate gen_server function can get tedious quickly, so it is common practice to create API functions that do these things for you.

In our case, we want to go to the section with the heading ;;; our server API and add the following:

(defun start-game ()
  (gen_server:cast (SERVER) '#(start-game true)))

(defun stop-game ()
  (gen_server:cast (SERVER) '#(stop-game true)))

(defun guess (n)
  (gen_server:cast (SERVER) `#(guess ,n)))

Functions in LFE are private by default, so simply adding these functions doesn't make them publicly accessible. As things now stand these will not be usable outside their module; if we want to use them, e.g., from the REPL, we need to export them.

Go to the top of the guessing-game module and update the "server API" section of the exports, changing this:

;; server API

(pid 0)

(echo 1)))

to this:

;; server API

(pid 0)

(echo 1)

(start-game 0)

(stop-game 0)

(guess 1)))

The final form of your module definition should look like this:

(defmodule guessing-game
  (behaviour gen_server)
  (export
    ;; gen_server implementation
    (start_link 0)
    (stop 0)
    ;; callback implementation
    (init 1)
    (handle_call 3)
    (handle_cast 2)
    (handle_info 2)
    (terminate 2)
    (code_change 3)
    ;; server API
    (pid 0)
    (echo 1)
    (start-game 0)
    (stop-game 0)
    (guess 1)))

Now our game functions are public, and we'll be able to use them from the REPL.

Finishing Touches

There is one last thing we can do to make our game more interesting. Right now, the game will work. But every time we start up the REPL and kick off a new game, the same "random" number will be selected for the answer. In order to make things interesting, we need to generate a random seed when we initialize our server.

We want to do this only once, though: not every time the game starts, and certainly not every time a user guesses! When the supervisor starts our game server, one function is called, and called only once: init/1. That's where we want to make the change to support a better-than-default random seed.

Let's change that function:

(defun init (state)

`#(ok ,state))

to this:

(defun init (state)
  (random:seed (erlang:phash2 `(,(node)))
               (erlang:monotonic_time)
               (erlang:unique_integer))
  `#(ok ,state))
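The random module used above has been deprecated since Erlang/OTP 19 in favour of rand, and rand seeds each process automatically the first time that process asks for a random number. If your Erlang version warns about random, the following is a sketch of a rand-based alternative; the helper name pick-answer is hypothetical.

```lfe
;; rand:uniform/1 returns a random integer between 1 and N inclusive.
;; A process that has not been seeded explicitly is seeded
;; automatically on its first call, so no seeding in init/1 is needed.
(defun pick-answer ()
  (rand:uniform 10))
```

With this approach, init/1 can stay as it was generated, and the start-game clause of handle_cast would call (pick-answer), or simply (rand:uniform 10), in place of (random:uniform 10).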

Now we're ready to play!

Playing the Game

If you are still in the REPL, quit out of it so that rebar3 can rebuild our changed module. Then start it up again:

$ rebar3 lfe repl

Once at the LFE prompt, start up the application:

lfe> (application:ensure_all_started 'guessing-game)

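With the application and all of its dependencies started, we're ready to start the game and play it through. Note that each call below returns ok right away: gen_server:cast sends its message without waiting for the server to handle it, so the server's output appears after the REPL has already shown the ok.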

lfe> (guessing-game:start-game)

;; ok

;; Guess the number I have chosen, between 1 and 10.

lfe> (guessing-game:guess 10)

;; ok

;; Your guess is too high.

lfe> (guessing-game:guess 1)

;; ok

;; Your guess is too low.

lfe> (guessing-game:guess 5)

;; ok

;; Your guess is too low.

lfe> (guessing-game:guess 7)

;; ok

;; Your guess is too low.

lfe> (guessing-game:guess 8)

;; ok

;; Well-guessed!!

;; Game over

Success! You've just done something pretty amazing, if still mysterious: you've not only created your first OTP application running a generic server, you've successfully run it through to completion!

Until we can dive into all the details of what you've seen in this walkthrough, much of what you've just written will seem strange and maybe even overkill. For now, though, we'll mark a placeholder for those concepts: the next section will briefly review what you've done and indicate which parts of this book will provide the remaining missing pieces.

Review

We've got the whole rest of the book ahead of us to cover much of what you've seen in the sample project we've just created with our guessing game. In the coming pages, you will revisit every aspect of what you've seen so far in lots of detail with correspondingly useful instructions on these matters.

That being said, it would be unfair to not at least read through the code together and mention the high-level concepts involved. Since we only touched the code in one file, that will be the one that gets the most of our attention for this short review, but let's touch on the others here, too.

Project Files

rebar.config

This is the file you need in every LFE project you will write in order to take advantage of the features (and time-savings!) that rebar3 provides. For this project, the two important parts are:

- the entry for dependencies (only LFE in this case), and

- the plugins entry for the LFE rebar3 plugin.

Project setup will be covered in Chapter XXX, section XXX.

Source Files

The source files for our sample program in this walkthrough are for an OTP application. OTP-based projects will be covered in Chapter XXX, section XXX.

.app.src

This file mostly holds application metadata, and most of what our app puts in it is fairly self-explanatory. Every LFE library and application needs one of these in its project source code.

guessing-game-app.lfe

This is the top-level file for our game, an OTP application. It only exports two functions: one to start the app and the other to stop it. The application is responsible for starting up whatever supervisors all your services/servers need. For this sample application, only one supervisor is needed (with a very simple supervision tree).
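For orientation, here is a minimal sketch of what such an application module can look like. It assumes the supervisor module exposes a start_link/0 function that starts (and registers) the top-level supervisor; the file rebar3 generated for you may differ in its details.

```lfe
;; Sketch of guessing-game-app.lfe (assumes guessing-game-sup:start_link/0 exists)
(defmodule guessing-game-app
  (behaviour application)
  ;; The OTP application behaviour requires exactly these two callbacks
  (export (start 2)
          (stop 1)))

(defun start (_type _args)
  ;; Start the top-level supervisor; it returns #(ok pid) on success,
  ;; which is the shape OTP expects back from an application's start/2.
  (guessing-game-sup:start_link))

(defun stop (_state)
  ;; Nothing extra to clean up: OTP shuts down the supervision tree for us.
  'ok)
```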

guessing-game-sup.lfe

This module is a little more involved: it has all the configuration and code necessary to properly set up a supervisor for our server. When something goes wrong with our server, the restart strategy defined by our supervisor will kick in and get things back up and running again. This is one of the key secrets to OTP's wizardry, and we will be covering it in great detail later.
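The sketch below shows the general shape of such a supervisor using the standard OTP supervisor behaviour: its init/1 callback returns the supervisor flags plus a child specification for our game server. The specific values (one_for_one, at most 3 restarts in 60 seconds, a 2000 ms shutdown timeout) are illustrative assumptions, not a copy of the generated file.

```lfe
;; Sketch of guessing-game-sup.lfe; restart values are illustrative assumptions
(defmodule guessing-game-sup
  (behaviour supervisor)
  (export (start_link 0)
          (init 1)))

(defun SERVER () (MODULE))

(defun start_link ()
  ;; Register the supervisor locally under its module name
  (supervisor:start_link `#(local ,(SERVER)) (MODULE) '()))

(defun init (_args)
  (let ((sup-flags #m(strategy one_for_one  ; restart only the crashed child
                      intensity 3           ; allow at most 3 restarts...
                      period 60))           ; ...within any 60-second window
        (game-server #m(id guessing-game
                        start #(guessing-game start_link ())
                        restart permanent   ; always restart the server
                        shutdown 2000       ; ms to wait for a clean stop
                        type worker
                        modules (guessing-game))))
    `#(ok #(,sup-flags (,game-server)))))
```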

src/guessing-game.lfe

This is the last file we'll look at, and is the one we'll cover in the most detail right now. Here's the entire content of what we created for our game:

(defmodule guessing-game
  (behaviour gen_server)
  (export
    ;; gen_server implementation
    (start_link 0)
    (stop 0)
    ;; callback implementation
    (init 1)
    (handle_call 3)
    (handle_cast 2)
    (handle_info 2)
    (terminate 2)
    (code_change 3)
    ;; server API
    (pid 0)
    (echo 1)
    (start-game 0)
    (stop-game 0)
    (guess 1)))

;;; ----------------
;;; config functions
;;; ----------------

(defun SERVER () (MODULE))
(defun initial-state () '#())
(defun genserver-opts () '())
(defun unknown-command () #(error "Unknown command."))

;;; -------------------------
;;; gen_server implementation
;;; -------------------------

(defun start_link ()
  (gen_server:start_link `#(local ,(SERVER))
                         (MODULE)
                         (initial-state)
                         (genserver-opts)))

(defun stop ()
  (gen_server:call (SERVER) 'stop))

;;; -----------------------
;;; callback implementation
;;; -----------------------

(defun init (state)
  (random:seed (erlang:phash2 `(,(node)))
               (erlang:monotonic_time)
               (erlang:unique_integer))
  `#(ok ,state))

(defun handle_cast
  ((`#(start-game true) _state)
   (io:format "Guess the number I have chosen, between 1 and 10.~n")
   `#(noreply ,(random:uniform 10)))
  ((`#(stop-game true) _state)
   (io:format "Game over~n")
   '#(noreply undefined))
  ((`#(guess ,n) answer) (when (== n answer))
   (io:format "Well-guessed!!~n")
   (stop-game)
   '#(noreply undefined))
  ((`#(guess ,n) answer) (when (> n answer))
   (io:format "Your guess is too high.~n")
   `#(noreply ,answer))
  ((`#(guess ,n) answer) (when (< n answer))
   (io:format "Your guess is too low.~n")
   `#(noreply ,answer))
  ((_msg state)
   `#(noreply ,state)))

(defun handle_call
  (('stop _from state)
   `#(stop shutdown ok ,state))
  ((`#(echo ,msg) _from state)
   `#(reply ,msg ,state))
  ((_message _from state)
   `#(reply ,(unknown-command) ,state)))

(defun handle_info
  ((`#(EXIT ,_from normal) state)
   `#(noreply ,state))
  ((`#(EXIT ,pid ,reason) state)
   (io:format "Process ~p exited! (Reason: ~p)~n" `(,pid ,reason))
   `#(noreply ,state))
  ((_msg state)
   `#(noreply ,state)))

(defun terminate (_reason _state)
  'ok)

(defun code_change (_old-version state _extra)
  `#(ok ,state))

;;; --------------
;;; our server API
;;; --------------

(defun pid ()
  (erlang:whereis (SERVER)))

(defun echo (msg)
  (gen_server:call (SERVER) `#(echo ,msg)))

(defun start-game ()
  (gen_server:cast (SERVER) '#(start-game true)))

(defun stop-game ()
  (gen_server:cast (SERVER) '#(stop-game true)))

(defun guess (n)
  (gen_server:cast (SERVER) `#(guess ,n)))

The file opens with the module declaration: not only the module's name, but also the functions it exports, both the gen_server callbacks and the public functions that make up our API. This will be covered in Chapter XXX, section XXX.

Next come a few functions that simply return constant values. We use functions for this because LFE does not have global variables. This will be covered in Chapter XXX, section XXX.

Then we define start_link and stop, the functions that start and stop this module as a generic OTP server. There is some boilerplate here that will be discussed when we dive into LFE/OTP. This will be covered in Chapter XXX, section XXX.

After that, we define the callback functions that the OTP machinery calls while running our server. Here you see several examples of pattern-matching function heads in LFE, a very powerful feature that lends itself nicely to concise and expressive code. This will be covered in Chapter XXX, section XXX.

Lastly, we define our own API. Most of these functions simply send messages to our running server. More on this in Chapter XXX, section XXX.
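To see the two kinds of messages side by side, you can exercise the API from the REPL once the application is running. echo uses gen_server:call, so it waits for and returns the server's reply; start-game, stop-game, and guess use gen_server:cast, which is why they always return ok immediately. The atom not-a-command below is just an arbitrary, unrecognized message, sent to trigger the catch-all handle_call clause.

```lfe
;; Assumes the application was started with application:ensure_all_started
lfe> (is_pid (guessing-game:pid))
;; true
lfe> (guessing-game:echo "hello")
;; "hello"
lfe> (gen_server:call (guessing-game:pid) 'not-a-command)
;; #(error "Unknown command.")
```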

The LFE REPL

We briefly introduced the REPL in the first version of the Hello-World example we wrote, explaining that the name is an acronym for 'read-eval-print loop' and showing how to start it with rebar3. As an LFE developer, you'll find it one of your primary tools (arguably the most powerful one at your disposal), so we're going to do a more thorough job of introducing its capabilities in this section.

Historical Note

The first Lisp interpreter was created sometime in late 1958 by then-grad student Steve Russell after reading John McCarthy's definition of eval. He had the idea that the theoretical description provided there could actually be implemented in machine code.

In 1963 L Peter Deutsch, a high school student at the time, combined the read, eval, and print core functions to create the first REPL (or, as he termed it then, the 'READ-EVAL-PRINT cycle'). This was done as part of his successful effort to port Lisp 1.5 from the IBM 7090 to the DEC PDP-1 and is referenced briefly in a written report filed with the Digital Equipment Computer Users Society in 1964.

A basic REPL can be implemented with just four functions; such an implementation could be started with the following:

(LOOP (PRINT (EVAL (READ))))

LFE has already implemented most of these functions for us (and quite robustly), but we could create our own very limited REPL (single lines only, with no execution context or environment) within the LFE REPL using the following convenience wrappers:

(defun read ()
  (case (io:get_line "myrepl> ")
    ("quit\n" "quit")
    (str (let ((`#(ok ,expr) (lfe_io:read_string str)))
           expr))))

(defun print (result)
  (lfe_io:format "~p~n" `(,result))
  result)

(defun loop
  (("quit")
   'good-bye)
  ((code)
   (loop (print (eval (read))))))

Now we can start our custom REPL inside the LFE REPL:

lfe> (loop (print (eval (read))))

This gives us a new prompt:

myrepl>

At this prompt we can evaluate basic LFE expressions:

myrepl> (+ 1 2 3)

;; 6

myrepl> (* 2 (lists:foldl #'+/2 0 '(1 2 3 4 5 6)))

;; 42

myrepl> quit

;; "quit"

;; good-bye

lfe>

Note that writing an evaluator is the hard part, and we've simply re-used the LFE evaluator for this demonstration.

Now that we've explored some of the background of REPLs and Lisp interpreters, let's look more deeply into the LFE REPL and how to best take advantage of its power when using the machine that is LFE and OTP.

Core Features

In keeping with LFE's overall heritage, its REPL is both a Lisp REPL and an Erlang shell. In fact, when support for the LFE REPL was added to the rebar3_lfe plugin, it reused all of rebar3's existing plumbing for Erlang shell support.

For the developer, this means that the LFE REPL offers a dual set of features, going well beyond what the Erlang shell alone provides. These include support for:

- Evaluation of Lisp S-expressions

- Definition of functions, complete with LFE tail-recursion support

- Definition of records and use of record-specific support functions/macros

- Creation of LFE macros via standard Lisp-2 syntax

- Macro examination and debugging with various expansion macros

- The ability to start the LFE REPL in distribution mode, complete with an Erlang cookie, and thus not only to access remote LFE and Erlang nodes, but also to be accessed as a remote node itself (by nodes that have been granted access)

- Access to the Erlang JCL and the ability to start separate LFE shells running concurrently
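Here are a few of these features in action. The session below defines a function, a record, and a macro directly at the prompt; the names double, point, and my-unless are purely illustrative. The printed expansion from macroexpand-1 is left out rather than guessed.

```lfe
;; Functions: define one at the prompt, then call it
lfe> (defun double (x) (* 2 x))
lfe> (double 21)
;; 42

;; Records: defrecord also generates make-point, point-x, and friends
lfe> (defrecord point x y)
lfe> (point-x (make-point x 3 y 4))
;; 3

;; Macros: define one, inspect a single expansion step, then use it
lfe> (defmacro my-unless (test body) `(if ,test 'false ,body))
lfe> (macroexpand-1 '(my-unless (> 1 2) 'ran))
lfe> (my-unless (> 1 2) 'ran)
;; ran
```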

Unsupported

The following capabilities are not supported in the LFE REPL:

- Module definitions; these are a file-based feature in LFE, just as with Erlang.
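Since modules can't be defined at the prompt, one workable pattern is to write the module in a file and then compile and load it from the REPL with the shell's c function. The file scratch.lfe and its module are hypothetical; the comments at the end show the REPL side of the workflow.

```lfe
;; scratch.lfe: a hypothetical module file in the REPL's current directory
(defmodule scratch
  (export (hello 0)))

(defun hello ()
  'world)

;; Back at the REPL, compile and load the file, then call into it:
;;   lfe> (c "scratch.lfe")
;;   lfe> (scratch:hello)
;;   world
```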

Starting LFE

The lfe executable

While this book focuses upon the use of rebar3 and its LFE plugin -- due entirely to the amount of time it saves through various features it supports -- LFE may be used quite easily without it.

To use LFE and its REPL without rebar3, you'll need to clone the repo, e.g.:

cd ~/lab

git clone https://github.com/lfe/lfe.git

cd lfe

Since you have read the earlier section on dependencies, you already have Erlang, make, and your system build tools installed. As such, all you have to do is run the following to build LFE:

make

This will generate an executable in ./bin and you can start the LFE REPL by calling it:

./bin/lfe

Erlang/OTP 28 [erts-16.0] [source] [64-bit] [smp:10:10] [ds:10:10:10] [async-threads:1] [jit] [dtrace]

   ..-~.~_~---..
  (      \\     )    |   A Lisp-2+ on the Erlang VM
  |`-.._/_\\_.-':    |   Type (help) for usage info.
  |         g |_ \   |
  |        n    | |  |   Docs: http://docs.lfe.io/
  |       a    / /   |   Source: http://github.com/lfe/lfe
   \     l    |_/    |
    \   r     /      |   LFE v2.2.0 (abort with ^G)
     `-E___.-'

lfe>

If you opt to install LFE system-wide with make install, then you can start the REPL from anywhere by simply executing lfe.

Via rebar3 lfe repl

As demonstrated earlier on several occasions, you can start the LFE REPL with the rebar3 LFE plugin (and this is what we'll do in the rest of this manual):

rebar3 lfe repl

Since you have updated your global rebar3 settings (in the "Prerequisites" section, after following the instructions on the rebar3 site), you may also start the LFE REPL from anywhere on your machine using the rebar3 command.

readline Support

The LFE REPL, being built atop the Erlang shell, can benefit from GNU Readline support, which provides enhanced line editing including command history, keyboard shortcuts, and tab completion. This section covers how to enable and configure these features for a better development experience.

Background

The GNU Readline library provides a common interface for line editing and history management across many interactive programs. Originally developed for the Bash shell, it has become the de facto standard for command-line editing in Unix-like systems.

Built-in History Support (Erlang/OTP 20+)

Starting with Erlang/OTP 20, persistent shell history is available out of the box, though it's disabled by default. This feature provides basic command history persistence across sessions.

Enabling Shell History

To enable persistent history for all Erlang-based shells (including LFE), add the following to your shell's configuration file (~/.bashrc, ~/.zshrc, etc.):

# Enable Erlang shell history

export ERL_AFLAGS="-kernel shell_history enabled"

Advanced History Configuration

You can customize the history behavior using additional kernel parameters:

# Complete history configuration

export ERL_AFLAGS="+pc unicode"

ERL_AFLAGS="$ERL_AFLAGS -kernel shell_history enabled"

ERL_AFLAGS="$ERL_AFLAGS -kernel shell_history_path '\"$HOME/.erl_history\"'"